This is a bit of a painful one: I’ve inherited a “content control” system which is essentially a vast number of ascx files generated outside of the development team, outside of version control, and dumped directly onto the web servers. They don’t have to be in the project because the site is configured with batch="false", so ASP.NET compiles each file individually when it is first requested.

I had been given the requirement to implement dynamic content functionality within the controls.

These ascx files are referenced by naming convention from a container aspx page, which calls LoadControl("~/content/somecontent.ascx") and renders the result within the usual surrounding master page. I came this close to pulling them all into a document db and building a basic CMS instead, but I found an even more basic method that keeps the existing ascx files working and lets newer ones have dynamic content.

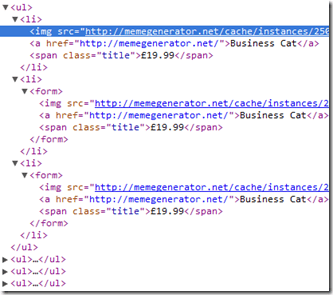

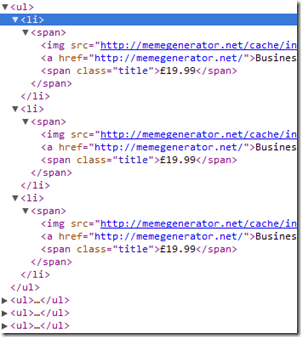

An example content control might look something like:

[html]
<%@ Control %>
<div>
    <ul>
        <li>
            <span>
                <img src="http://memegenerator.net/cache/instances/250×250/8/8904/9118489.jpg" style="height:250px;width:250px;" />
                <a href="http://memegenerator.net/">Business Cat</a>
                <span class="title">£19.99</span>
            </span>
        </li>
        <li>
            <span>
                <img src="http://memegenerator.net/cache/instances/250×250/8/8904/9118489.jpg" style="height:250px;width:250px;" />
                <a href="http://memegenerator.net/">Business Cat</a>
                <span class="title">£19.99</span>
            </span>
        </li>
        <li>
            <span>
                <img src="http://memegenerator.net/cache/instances/250×250/8/8904/9118489.jpg" style="height:250px;width:250px;" />
                <a href="http://memegenerator.net/">Business Cat</a>
                <span class="title">£19.99</span>
            </span>
        </li>
    </ul>
</div>
[/html]

One file, no ascx.cs (these are written outside of the development team, remember). There are a couple of thousand of them, so I couldn’t easily go through and edit them all. So how do I allow dynamic content to be injected with minimal change?

I started off with a basic little class to allow content injection to a user control:

[csharp]
public class Inject : System.Web.UI.UserControl
{
    public DynamicContent Data { get; set; }
}
[/csharp]

and the class for the data itself:

[csharp]
public class DynamicContent
{
    public string Greeting { get; set; }
    public string Name { get; set; }
    public DateTime Stamp { get; set; }
}
[/csharp]

Then how to allow data to be injected only into the new content files and leave the heaps of existing ones untouched (until I can complete the business case documentation for a CMS and get budget for it, that is)? This method should do it:

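[csharp]
private System.Web.UI.Control RenderDataInjectionControl(string pathToControlToLoad, DynamicContent contentToInject)
{
    // Load (and, if necessary, compile) the ascx at the given virtual path.
    var control = LoadControl(pathToControlToLoad);

    // "as" yields null for legacy controls that do not inherit from Inject.
    var injectControl = control as Inject;
    if (injectControl != null)
    {
        injectControl.Data = contentToInject;
    }

    // Both variables refer to the same instance; fall back to the uncast one.
    return injectControl ?? control;
}
[/csharp]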

Essentially: load the control and attempt to cast it to the Inject type. If the cast works, inject the data and return the cast control; otherwise just return the uncast control, untouched.
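To see both branches of that cast without spinning up a web server, here is a minimal console sketch of the same pattern. The Control class below is a hypothetical stand-in for System.Web.UI.Control, and InjectIfSupported is an illustrative name for the body of RenderDataInjectionControl minus the LoadControl call; neither is part of the real site.

[csharp]
using System;

// Hypothetical stand-in for System.Web.UI.Control, so this runs without ASP.NET.
public class Control { }

public class DynamicContent
{
    public string Greeting { get; set; }
    public string Name { get; set; }
    public DateTime Stamp { get; set; }
}

// Mirrors the post's Inject class, but derives from the stand-in base.
public class Inject : Control
{
    public DynamicContent Data { get; set; }
}

public static class Program
{
    // Same logic as RenderDataInjectionControl, without the LoadControl step.
    public static Control InjectIfSupported(Control control, DynamicContent content)
    {
        var injectControl = control as Inject; // null for a legacy control
        if (injectControl != null)
        {
            injectControl.Data = content;
        }
        return injectControl ?? control;
    }

    public static void Main()
    {
        var content = new DynamicContent { Greeting = "Hello", Name = "Dave", Stamp = DateTime.Now };

        var legacyResult = InjectIfSupported(new Control(), content);
        var newResult = InjectIfSupported(new Inject(), content);

        Console.WriteLine(legacyResult is Inject);          // False
        Console.WriteLine(((Inject)newResult).Data.Name);   // Dave
    }
}
[/csharp]

The legacy control passes straight through, which is why the couple of thousand existing files need no changes.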

Calling this with an old control would just render the old control without issues:

[csharp]
const string contentToLoad = "~/LoadMeAtRunTime_static.ascx";
var contentToInject = new DynamicContent { Greeting = "Hello", Name = "Dave", Stamp = DateTime.Now };
containerDiv.Controls.Add(RenderDataInjectionControl(contentToLoad, contentToInject));
[/csharp]

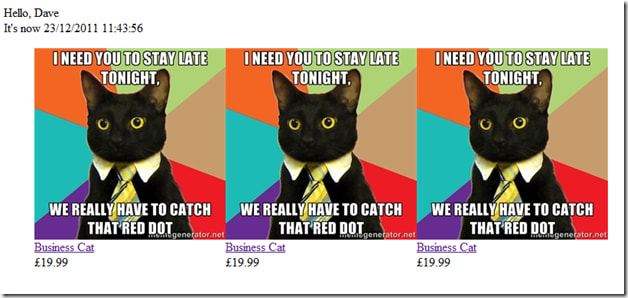

Now we can create a new control whose content is injected dynamically:

[html highlight="1"]
<%@ Control CodeBehind="Inject.cs" Inherits="CodeControl_POC.Inject" %>
<div>
    <%= Data.Greeting %>, <%= Data.Name %><br />
    It’s now <%= Data.Stamp.ToString() %>
</div>
<div>
    <ul>
        <li>
            <span>
                <img src="http://memegenerator.net/cache/instances/250×250/8/8904/9118489.jpg" style="height:250px;width:250px;" />
                <a href="http://memegenerator.net/">Business Cat</a>
                <span class="title">£19.99</span>
            </span>
        </li>
        <li>
            <span>
                <img src="http://memegenerator.net/cache/instances/250×250/8/8904/9118489.jpg" style="height:250px;width:250px;" />
                <a href="http://memegenerator.net/">Business Cat</a>
                <span class="title">£19.99</span>
            </span>
        </li>
        <li>
            <span>
                <img src="http://memegenerator.net/cache/instances/250×250/8/8904/9118489.jpg" style="height:250px;width:250px;" />
                <a href="http://memegenerator.net/">Business Cat</a>
                <span class="title">£19.99</span>
            </span>
        </li>
    </ul>
</div>
[/html]

The key here is the top line:

[html highlight="1"]
<%@ Control CodeBehind="Inject.cs" Inherits="CodeControl_POC.Inject" %>
[/html]

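Since this sets the control’s base type to our Inject class, the cast in RenderDataInjectionControl succeeds and the Data property is populated before the control renders. Calling it gives us the same markup as before, but with a little injected dynamic content: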

[csharp]
const string contentToLoad = "~/LoadMeAtRunTime_dynamic.ascx";
var contentToInject = new DynamicContent { Greeting = "Hello", Name = "Dave", Stamp = DateTime.Now };
containerDiv.Controls.Add(RenderDataInjectionControl(contentToLoad, contentToInject));
[/csharp]
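One thing to watch: if a dynamic control is ever loaded by a plain LoadControl call that skips the injection step, Data is null and an expression like Data.Greeting in the markup throws a NullReferenceException at render time. A possible guard, sketched here as an assumption rather than something the original Inject class does, is to default Data to an empty instance. The UserControl class below is a hypothetical stand-in for System.Web.UI.UserControl so the sketch runs as a console app.

[csharp]
using System;

// Hypothetical stand-in for System.Web.UI.UserControl, so this runs without ASP.NET.
public class UserControl { }

public class DynamicContent
{
    public string Greeting { get; set; }
    public string Name { get; set; }
    public DateTime Stamp { get; set; }
}

public class Inject : UserControl
{
    // Start with an empty instance so markup can always dereference Data.
    private DynamicContent _data = new DynamicContent();

    public DynamicContent Data
    {
        get { return _data; }
        // Treat a null assignment as "no content" instead of storing null.
        set { _data = value ?? new DynamicContent(); }
    }
}

public static class Program
{
    public static void Main()
    {
        var control = new Inject();

        // Nothing injected yet: Greeting is null, but reading it does not throw.
        Console.WriteLine(control.Data.Greeting == null); // True

        control.Data = new DynamicContent { Greeting = "Hello", Name = "Dave", Stamp = DateTime.Now };
        Console.WriteLine(control.Data.Greeting + ", " + control.Data.Name); // Hello, Dave
    }
}
[/csharp]

With this in place an un-injected control would render empty strings for Greeting and Name, and the default DateTime value for Stamp, rather than failing the whole page.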

Just a little something to help work with legacy code until you can complete your study of which CMS to implement.

Comments welcomed.