In the beginning, there was chaos

No doubt you’ve worked in a company where progression within the I.T. department, as a developer, is neither easy to explain nor easy to measure.

Perhaps there is a skills matrix which defines a “senior developer” as someone who does all the stuff you do “but better”. Or perhaps the matrix contains things that are not pertinent to your technical role and have quite obviously been copy-pasted from a generic skills matrix template meant for other staff, or even from your manager’s previous company, which might be in a barely related sector.

I only really noticed this once I became a manager (the Peter Principle in action) and found myself giving feedback to obviously skilled developers without being able to explain what they needed to do to progress. For me, this was lesson #1 in management: either have good answers to good questions, or find out the answers.

After a lot of internet hunting, a colleague in a similarly frustrating situation and I found a selection of insightful material on exactly this problem (how to grade the developers within your company): articles by Joel Spolsky about Fog Creek’s developers, SWEBOK, and a Construx whitepaper. These allowed us to develop our own, similar system, and this article explains:

- how to give complete transparency to each member of your team,

- how to collate your team members’ grades into a “team” grading system in order to identify potential weaknesses,

- how to adapt the system to specific roles and requirements.

I’ll try my best to give some sort of structure to this article and in doing so hopefully cover each of the points above.

From chaos, came the desire for structure

Following are the steps we took in our attempt to achieve a better grading model.

Recognise the problem in the department

This was the easy part, and was essentially covered in my introduction. The classic “how come he’s a Senior Dev when he’s rubbish at X, and I’m great at X but only a Mid-level Dev?!” question was enough to show that the existing system didn’t really fit, since it couldn’t give a good answer.

Conduct a survey of all developers to identify what constitutes a developer’s role and responsibilities within the company

This required us to use the I.T. intranet (a SharePoint portal) to request feedback from every developer in the company (about 35-40 people at the time) on what they believed their job required of them, i.e., the key skills, abilities, and responsibilities that enable them to fulfil their role. Given the general lack of faith in the existing system, it took quite a lot of convincing to get enough responses, but we got there.

Find a group of peers to review the outputs and group them into similar genres

This involved getting a peer group together (in this case the 5 or 6 tech leads) who committed to meeting regularly each week to discuss all of the responses and group them into genres. We were very fortunate that the development team as a whole took the process on board and delivered some really good quality comments. The actual results of the request for feedback, already grouped into headings (but not de-duplicated), are below:

Standards

- Adherence to coding standards.

- Adherence to industry and company standards and policies

- Follows industry best practice

- Writing code that consistently meets standards (Dev and Architecture).

- Writes clean looking code

Refactoring

- Improve and maintain code quality.

- Refactor code where necessary

- Takes time to go back and refactor own code

Deployment

- Ability to deploy to project environments.

- Manage the deployment of code to environment

Environment

- Environment management (deployments scripts and configurations)

- Manage the setting up of the development environment

- Has knowledge outside of development (understands how production works, how systems are administered)

- Have knowledge of the systems and how the different services/processors etc interact and their dependencies.

Merge/Branch

- Ability to merge code and understanding of the merge/branch process.

- Can merge code between branches accurately and reliably.

- Configuration management (TFS)

- Understands the branching model and how merges work.

Documentation

- Writes clean looking code

- Creates clear and concise sets of documentation around projects worked on for technical and non-technical audiences.

- Documentation – ability to produce clear documentation for both IT and non-IT people.

- Documentation (comments in code, UML specifications, SharePoint items)

- Writes clean, self-documenting code that is well structured, simple and documented appropriately.

Debugging

- Can find the root cause of a bug through the various layers of an application and is able to fix typical issues at all of these layers.

Agile/Scrum

- Good knowledge of Agile Scrum

- Understands Agile methodologies in general and knows how we use Scrum.

Company Systems/Domain Knowledge

- Ability to realistically estimate tasks.

- Has a good level of understanding of the Company systems, what each system does (high level) and how they interact with each other.

- Knowledge of processes used by the business users of the system.

- Has knowledge outside of development (understands how production works, how systems are administered)

- Have knowledge of the systems and how the different services/processors etc interact and their dependencies.

Coding

- Coding in C# (3.5/4.0)

- Functional testing (BDD, planning, development, execution, evaluation)

- Good knowledge of sql and adherence to sql standards.

- Good understanding of requirements.

- Confident developing applications at client, server and database level. i.e. JavaScript, C# and T-SQL.

- Knowledge about the frequently used parts of the .NET framework

- Knowledge and practice of TDD.

- Proficient in multiple languages (to understand different styles and methods of coding)

- Unit testing (TDD, mocking, coverage assessment)

- Use of C#.net and vb.net.

- Use of front end technologies – jquery, json etc.

- Writes clean looking code

Patterns & Practices/ Design Patterns

- Design Patterns (the most used or important ones)

Estimation/Planning

- Estimation of work items

- Input into planning of how tasks are to be carried out – what technologies can be used.

- Knowledge of processes used by the business users of the system.

Presentation

- Presentation (and other knowledge dissemination skills)

Leadership

- Coaching

- Interviewing

- Meeting facilitation

- Mentoring

Learning

- Interest in gaining experience in new technologies.

- Learning and research

Process

- Interest and involvement in improving the software development process and the company policies

This gave us 16 reasonably distinct areas to focus on, which the group sanitised slightly to end up with 13 “Knowledge Areas”: Debugging, Standards, Coding, Design Patterns, Documentation, Leadership, Domain Knowledge, R&D, Agile/Scrum, Presentation, Branch/Merge, Environment, and Process.

Within each genre, attempt to define distinct levels of ability

Each member of the group took one area per week and attempted to define a few distinct levels of ability; this was easier for some areas than others, and some level descriptions ended up more verbose than others. We had initially agreed to use the levels Beginner, Basic, Good, Great, and Superstar (the last being for overachievers); however, it became apparent that defining five distinct levels was pretty tricky for some areas, so this became Basic, Good, and Great, with Superstar added where possible.

Here are a couple of example areas with their levels defined:

Documentation

Basic

Updates where necessary

Good

Create support documents

Great

Creates project handover docs, support docs, implementation docs

Superstar

“Technical Author”: takes ownership, adds missing artefacts, “tech lead assistant” (that’s not meant to sound patronising, honest!)

Domain Knowledge

Basic

Knows where to find the implementation of functionality specific to the area being developed within the codebase/database. Understands the structure and separation of the projects within a given solution, and where to make the changes necessary to implement required functionality.

Good

Can identify where software artefacts exist within the domain and utilise these in order to reduce code repetition. Can identify which areas of functionality being developed should become reusable artefacts.

Great

Uses extensive knowledge of the system under development, and of other systems it may interact with, to determine various potential implementations for a given requirement; defines the impact of each on every affected system, and uses this to propose the best solution(s).

Superstar

Can suggest fundamental changes to the domain model as the requirements for functionality and development evolve, so that the model better fits them.

Entire developer group reviews the outcome; feedback and rewrite where necessary

Once all of these areas have at least three levels of ability defined, the entire product is shared with the development team for honest feedback. The ability levels can be altered at this point, but the main aim is to get buy-in and agreement from the majority of the development team.

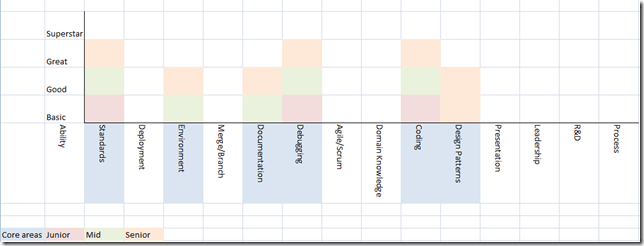

Define “Core” Knowledge Areas

Each company will no doubt have a selection of key abilities in which every developer grade must have at least a minimum rating. Another couple of sessions with the peer group decided what those were for our company:

Junior Dev

- Standards (Basic)

- Debugging (Basic)

- Coding (Basic)

Mid Dev

- Standards (Good)

- Debugging (Good)

- Coding (Good)

- Design Patterns (Basic)

- Documentation (Basic)

- Environment (Basic)

Senior Dev

- Standards (Great)

- Debugging (Great)

- Coding (Great)

- Design Patterns (Good)

- Documentation (Good)

- Environment (Good)

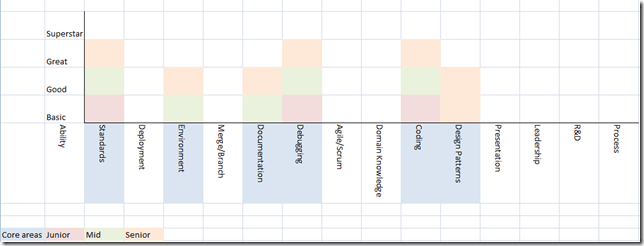

The image above shows a high-level template in which the coloured blocks define the core level template for each grade: mauve for junior, green for mid, and peach for senior (please forgive my inability to choose decent colours in Excel).
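As a minimal sketch (not the tooling we actually used; `Level`, `CORE_REQUIREMENTS` and `core_shortfalls` are hypothetical names), the core requirements listed above can be captured as data and checked against a developer’s ratings. It assumes the ability levels are ordered Basic < Good < Great < Superstar, with 0 meaning an area has not been rated.

```python
from enum import IntEnum


class Level(IntEnum):
    """Ability levels, ordered so that comparisons such as GOOD >= BASIC work."""
    NONE = 0  # not yet rated in this area
    BASIC = 1
    GOOD = 2
    GREAT = 3
    SUPERSTAR = 4


# Core Knowledge Areas and the minimum level each grade must reach in them.
CORE_REQUIREMENTS = {
    "Junior": {"Standards": Level.BASIC, "Debugging": Level.BASIC, "Coding": Level.BASIC},
    "Mid": {
        "Standards": Level.GOOD, "Debugging": Level.GOOD, "Coding": Level.GOOD,
        "Design Patterns": Level.BASIC, "Documentation": Level.BASIC, "Environment": Level.BASIC,
    },
    "Senior": {
        "Standards": Level.GREAT, "Debugging": Level.GREAT, "Coding": Level.GREAT,
        "Design Patterns": Level.GOOD, "Documentation": Level.GOOD, "Environment": Level.GOOD,
    },
}


def core_shortfalls(ratings, grade):
    """Return {area: (current, required)} for each core area below the grade's minimum."""
    shortfalls = {}
    for area, required in CORE_REQUIREMENTS[grade].items():
        current = ratings.get(area, Level.NONE)  # unrated areas count as NONE
        if current < required:
            shortfalls[area] = (current, required)
    return shortfalls


ratings = {
    "Standards": Level.GREAT, "Debugging": Level.GOOD, "Coding": Level.GREAT,
    "Design Patterns": Level.GOOD, "Documentation": Level.BASIC, "Environment": Level.GOOD,
}
for area, (current, required) in core_shortfalls(ratings, "Senior").items():
    print(f"{area}: {current.name} (needs {required.name})")
```

For this example developer the loop prints `Debugging: GOOD (needs GREAT)` and `Documentation: BASIC (needs GOOD)`, which are exactly the areas to turn into objectives before a move to Senior.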

Attempt to define a template for the various levels of developer in the company

Once the Knowledge Areas are defined and the ability levels agreed, the next step is to work out what constitutes each Developer grade within the company. To do this, the group took a couple of steps:

Firstly, I unofficially applied the rating system to a couple of members of my team: one whom I thought of as a classic Senior Dev, and one I thought of as a classic Mid Dev. Once I had worked through this new review process with them, we had a pretty good idea of which Knowledge Areas, and at what level, a Mid and a Senior Dev should have within the company.

Meanwhile, within the group we worked out, for each developer grade, the minimum number of Knowledge Areas that must be rated at each ability level. For example:

- Senior Developer: 4 great or higher, 8 good or higher, 10 basic or higher

- Mid Developer: 2 great or higher, 4 good or higher, 6 basic or higher

- Junior Developer: 2 good or higher, 6 basic or higher
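The thresholds above can be sketched in the same way. This assumes the counts are cumulative (an area rated Great also counts towards “good or higher”) and that a developer is placed at the highest grade whose rules are all met; `GRADE_THRESHOLDS`, `meets_grade` and `highest_grade` are illustrative names only, and the example ratings are made up.

```python
from enum import IntEnum
from typing import Optional


class Level(IntEnum):
    NONE = 0
    BASIC = 1
    GOOD = 2
    GREAT = 3
    SUPERSTAR = 4


# "At least N Knowledge Areas at <level> or higher", per grade (figures from the text).
GRADE_THRESHOLDS = {
    "Senior": {Level.GREAT: 4, Level.GOOD: 8, Level.BASIC: 10},
    "Mid": {Level.GREAT: 2, Level.GOOD: 4, Level.BASIC: 6},
    "Junior": {Level.GOOD: 2, Level.BASIC: 6},
}


def meets_grade(ratings: dict, grade: str) -> bool:
    """True if every threshold for the grade is met; counts are cumulative."""
    for level, minimum in GRADE_THRESHOLDS[grade].items():
        count = sum(1 for rating in ratings.values() if rating >= level)
        if count < minimum:
            return False
    return True


def highest_grade(ratings: dict) -> Optional[str]:
    """Highest grade whose thresholds are all met, or None if none are."""
    for grade in ("Senior", "Mid", "Junior"):
        if meets_grade(ratings, grade):
            return grade
    return None


example_ratings = {
    "Debugging": Level.GREAT, "Standards": Level.GREAT, "Coding": Level.GREAT,
    "Design Patterns": Level.GOOD, "Documentation": Level.GOOD, "Leadership": Level.BASIC,
    "Domain Knowledge": Level.GOOD, "R&D": Level.BASIC, "Agile/Scrum": Level.GOOD,
    "Presentation": Level.BASIC, "Branch/Merge": Level.GOOD, "Environment": Level.GOOD,
    "Process": Level.NONE,
}
print(highest_grade(example_ratings))  # Mid: only 3 areas are Great, Senior needs 4
```

In practice this count-based check would sit alongside the per-grade core Knowledge Area minimums, so a developer with many Great ratings but a weak core area would still not qualify.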

A breakdown of coding ability per language, weighted appropriately for the specific role (e.g., UI dev, regular dev, backend dev, SQL dev, etc.)

Coding is a tricky one, so we started breaking it right down and making it a little more specific. For example, the devs working on the website (though not UX devs) would certainly require good jQuery and CSS knowledge, but perhaps less WCF and C# multithreading. As such, each role could define its own language matrix.

In such a matrix, a dev might be rated on their abilities in C# (weighted at 60% of the total), VB.NET (20%), HTML (10%), and jQuery (10%). A dev scoring 3 out of 5 in C#, 3 in VB.NET, 2 in HTML and 2 in jQuery would get a weighted score of 0.6 × 3 + 0.2 × 3 + 0.1 × 2 + 0.1 × 2 = 2.8. The “Coding” Knowledge Area could therefore set a minimum weighted language score for each grade: perhaps a Junior would need a minimum of 2, a Mid 3, and a Senior 4.
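The weighted coding score is just a weighted average. Here is a minimal Python sketch using the example weights and scores from the text; the constant and function names are hypothetical, and the per-grade minimums are the tentative “perhaps” figures above.

```python
# Hypothetical role: language weights from the example in the text (must sum to 1).
ROLE_WEIGHTS = {"C#": 0.6, "VB.NET": 0.2, "HTML": 0.1, "jQuery": 0.1}

# The text's tentative minimum weighted coding score per grade.
MIN_CODING_SCORE = {"Junior": 2.0, "Mid": 3.0, "Senior": 4.0}


def weighted_coding_score(scores, weights):
    """Weighted average of per-language scores (0-5); unrated languages score 0."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("language weights must sum to 1")
    total = sum(weight * scores.get(language, 0) for language, weight in weights.items())
    return round(total, 2)  # strip floating-point noise from the weighted sum


scores = {"C#": 3, "VB.NET": 3, "HTML": 2, "jQuery": 2}
score = weighted_coding_score(scores, ROLE_WEIGHTS)
grades_met = [grade for grade, minimum in MIN_CODING_SCORE.items() if score >= minimum]
print(score, grades_met)  # 2.8 ['Junior']
```

Keeping the weights per role means a UI dev and a backend dev can be held to the same minimum score while being rated on quite different languages.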

Review process becomes a case of identifying/checking off each entry in the various levels of each genre.

Once this system is agreed by all members of the team, an annual appraisal becomes a much simpler case of looking at the dev’s current charts, noting the areas in which they’re not scoring highly, and creating objectives that allow them to focus on improving those areas in order to progress through the ranks.

Anyone overachieving (superstar status) could temporarily be given a bonus of some kind, such as attending more seminars, webinars or events, or a salary somewhere between their current salary and that of the next pay grade (a great idea from the Fog Creek article).

Any items missing must be taken care of within a set time period

If the dev is not achieving what their job title says then they can be given a deadline in which to raise their rating in the areas they are deficient. Easy.

Transparency of what is required in order to be put forward for promotion

No more “why is he a Senior and I’m not” questions. It’s all out there for everyone in the team to see.

Supporting general guidelines for attitude, punctuality etc. cover off talented but unprofessional developers

Add a general “Code of Conduct” checklist so that you don’t end up with “superstars” who are complete…ly unprofessional. For example:

Professional Conduct

- Team Player

- Meets Deadlines

- Organisational Skills

- Communication Skills

- Approachability

- Leadership Ability

- Interaction With Team Members

- Interaction With External Departments (BAs etc.)

- Attendance

- Time Keeping

- Quality of Work

Varying the level of detail in the charts makes them specific to the individual (dev/team lead), the team (team lead/line manager), and the department (line manager/head of I.T.)

By overlaying the charts of all the members of your team, you end up with a team chart: useful for deciding which team best fits a new project, which team needs what training, which skills the next interviewee should be tested on in order to help fill a skills gap, etc.

Overlay the various teams’ charts and you end up with a department chart, which is good for the less techie but more senior I.T. members.
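“Overlaying” charts could be done in several ways. One simple sketch, assuming each rating is stored as a number from 0 (unrated) to 4 (Superstar), takes the mean and the best rating per Knowledge Area and flags any area where nobody on the team reaches Good. The team data, the function names and the `min_best` threshold are all made up for illustration.

```python
from statistics import mean

# Made-up ratings (0 = unrated, 1 = Basic ... 4 = Superstar) for a three-person team.
team = {
    "Dev A": {"Coding": 3, "Debugging": 3, "Documentation": 1, "Environment": 2},
    "Dev B": {"Coding": 2, "Debugging": 2, "Documentation": 1, "Environment": 1},
    "Dev C": {"Coding": 3, "Debugging": 2, "Documentation": 1, "Environment": 1},
}


def team_chart(members):
    """Overlay individual charts: the mean and best rating in each Knowledge Area."""
    areas = sorted({area for ratings in members.values() for area in ratings})
    chart = {}
    for area in areas:
        values = [ratings.get(area, 0) for ratings in members.values()]
        chart[area] = {"mean": round(mean(values), 2), "best": max(values)}
    return chart


def skills_gaps(chart, min_best=2):
    """Areas where nobody reaches min_best (2 = Good): candidates for training or hiring."""
    return [area for area, stats in chart.items() if stats["best"] < min_best]


chart = team_chart(team)
print(chart["Coding"])  # {'mean': 2.67, 'best': 3}
print(skills_gaps(chart))  # ['Documentation']
```

The mean shows the team’s general strength in an area, while the best rating shows whether at least one person can cover it; repeating the same overlay across teams gives the department view.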

Use the new data to identify potential new roles

We quickly realised that someone with Superstar status in Documentation and generally high-mid dev ratings should think about moving into a Tech Author role (which previously didn’t exist). If they excel in Presentation, Estimation, and Agile/Scrum, then perhaps they should focus on becoming a Scrum Master. Awesome at Design Patterns, Coding, and Leadership? Become a Tech Lead.
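These rules of thumb could be encoded as simple profile checks. The profiles below are assumptions (reading “excel at” as Great or higher, and using Coding at Good as a stand-in for “generally high-mid dev ratings”); note also that Estimation is not in the final list of Knowledge Areas, so a real system would need to rate it separately or drop it. `ROLE_PROFILES` and `suggest_roles` are hypothetical names.

```python
from enum import IntEnum


class Level(IntEnum):
    NONE = 0
    BASIC = 1
    GOOD = 2
    GREAT = 3
    SUPERSTAR = 4


# Hypothetical role profiles: the minimum level required in each listed area.
ROLE_PROFILES = {
    # Coding at Good stands in for "generally high-mid dev ratings".
    "Tech Author": {"Documentation": Level.SUPERSTAR, "Coding": Level.GOOD},
    "Scrum Master": {"Presentation": Level.GREAT, "Estimation": Level.GREAT, "Agile/Scrum": Level.GREAT},
    "Tech Lead": {"Design Patterns": Level.GREAT, "Coding": Level.GREAT, "Leadership": Level.GREAT},
}


def suggest_roles(ratings):
    """Roles whose every minimum is met by the developer's ratings."""
    return [
        role
        for role, minimums in ROLE_PROFILES.items()
        if all(ratings.get(area, Level.NONE) >= level for area, level in minimums.items())
    ]


dev = {"Design Patterns": Level.GREAT, "Coding": Level.SUPERSTAR,
       "Leadership": Level.GREAT, "Documentation": Level.GOOD}
print(suggest_roles(dev))  # ['Tech Lead']
```

A suggestion like this is only a conversation starter for an appraisal, not a decision; the point is that the same ratings that show weaknesses also surface strengths.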

Being able to help people identify not only where they’re weak but also where they’re strong helps to both build a solid foundation and give direction for progression.

Conclusion

As far as I’m aware, this process was accepted within the company (I left shortly after its introduction, and have since learned that another, completely different, method has been “introduced” from on high), and I found it much easier giving appraisals when there was a peer-reviewed system helping to define people’s grades.

It also gave my team specific areas in which to define SMART objectives, and there was a general increase in enthusiasm for this process.

None of this would have been possible without the articles listed below and the dedication of the Tech Lead team members, determined to make life better for all developers.