Given that I just said you need to repair ReSharper in order to escape a weird cloud service project compilation error, what if you’re having trouble running the repair tool itself?

ReSharper installer

After clicking Repair – Bummer


Every now and then I’ll find that Visual Studio just blows up – seemingly without me having changed anything – throwing the error:

A numeric comparison was attempted on "$(TargetPlatformVersion)" that evaluates to "" instead of a number, in condition "'$(TargetPlatformVersion)' > '8.0'".

The number at the end changes every so often.

How to fix this? Annoyingly simple.

Repair ReSharper and restart Visual Studio.

Hope that saves you a few hours..!

Want your own NuGet repo? Don’t want to pay for MyGet or similar?

Here’s how I did it recently at Mailcloud (over an espresso that didn’t even have time to get cold; it’s that easy).

Creating your own NuGet server could barely be any easier than it is now.

It’ll look something like this afterwards:

For the API key: I just grabbed mine from newguid.com:
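If you’d rather not visit a website for a key, a GUID generated from the command line does the same job. Here’s a minimal sketch, assuming a Unix-ish shell (Git Bash, Cygwin, or whatever Linux box you deploy from); new_api_key is a hypothetical helper name. Paste the result wherever you configure the key (in NuGet.Server that is, as far as I know, the apiKey entry in web.config’s appSettings).

```shell
#!/bin/sh
# A stand-in for newguid.com: print a random GUID-shaped string to use as an API key.
# Uses uuidgen when installed; otherwise formats 16 random bytes from /dev/urandom
# as 8-4-4-4-12 hex. (The fallback doesn't set RFC 4122 version bits, which
# shouldn't matter for an opaque key.)
new_api_key() {
  if command -v uuidgen >/dev/null 2>&1; then
    uuidgen | tr 'A-F' 'a-f'
  else
    hex=$(od -An -N16 -tx1 /dev/urandom | tr -d ' \n')
    printf '%s-%s-%s-%s-%s\n' \
      "$(printf '%s' "$hex" | cut -c1-8)" \
      "$(printf '%s' "$hex" | cut -c9-12)" \
      "$(printf '%s' "$hex" | cut -c13-16)" \
      "$(printf '%s' "$hex" | cut -c17-20)" \
      "$(printf '%s' "$hex" | cut -c21-32)"
  fi
}

# Usage: new_api_key
```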

If you’re using Azure and you ticked “Create remote resources” when creating the project, you can push this straight out to the newly created website: right-click the project -> Publish:

Or use PowerShell, or MSBuild with WebDeploy, or FTP it somewhere, or keep it local – your call, buddy!

And that’s the hard part done 🙂

If you haven’t configured an API key, the page will alert you to this on your first visit.

This is done in the usual manner – don’t forget your API key:

Let’s reference our shiny new NuGet repo:

Edit your Package Manager settings and add a new source pointing at your new repo:

Now you can open the Package Manager window or console and find your pushed NuGet package:

When working with shared functionality across multiple .Net projects and team members, historically your options are limited to something like:

There are several problems with these, such as:

So how can you get around this pain?

I’m glad you asked.

The Ruby language has had this problem solved for many, many years – since around 2004, in fact.

Using the gem command you could install a Ruby package from a central location into your project, along with all its dependencies, e.g.:

gem install rails --include-dependencies

This one would pull down Rails as well as the packages that Rails itself depended on.

You could search for gems, update your project’s gems, remove old versions, and remove the gem from your project entirely; all with minimal friction. No more scouring the internets for information on what to download, where to get it from, how to install it, and then find out you need to repeat this for a dozen other dependent packages!

You use a .gemspec file to define the contents and metadata for your gem before pushing the gem to a shared repository.

Even RubyGems was born from a frustration that the Ruby ecosystem wasn’t supported as well as Perl’s; Perl had CPAN (Comprehensive Perl Archive Network) for almost a DECADE before RubyGems appeared – it’s been up since 1995!

If Perl had CPAN since 1995, and Ruby had gems since 2004, where was the .Net solution?

I’d spent many a project forgetting where I downloaded PostSharp or RhinoMocks from, and having to repeat the steps of discovery before I could even start development: leaving the IDE to browse online, download, unzip, copy, paste, reference within the IDE, find there were missing dependencies, rinse, repeat.

Around mid-2010 Dru Sellers and gang (including Rob Reynolds aka @ferventcoder) built the fantastic “nu[bular]” project; this was itself a ruby gem, and could only be installed using ruby gems; i.e., to use nu you needed to install ruby and rubygems.

Side note: Rob was no stranger to the concept of .Net gems and has since created the incredible Chocolatey apt-get style package manager for installing applications instead of just referencing packages within your code projects, which I’ve previously waxed non-lyrical about.

Once installed you were able to pull down and install .Net packages into your projects (again, these were actually just ruby gems). At the time of writing it still exists as a ruby gem and you can see the humble beginnings and subsequent death (or rather, fading away) of the project over on its homepage (this google group).

I used this when I first heard about it and found it to be extremely promising; the idea that you can centralise the package management for the .Net ecosystem was an extremely attractive proposition; unfortunately at the time I was working at a company where introducing new and exciting (especially open source) things was generally considered Scary™. However it still had some way to go.

In October 2010 Nu became Nu v2, at which point it became NuPack. The Epic Trinity of Microsoft awesomeness – namely Scott Guthrie, Scott Hanselman, and Phil Haack – together with David Ebbo, David Fowler and the Nubular team took a mere matter of months to create the first fully open sourced project that was central to an MS product (i.e., Visual Studio), which was accepted into the ASP.Net open source gallery in Oct 2010.

It’s referred to as NuPack in the ASP.NET MVC 3 Beta release notes from Oct 6 2010, but it underwent a name change due to a conflict with an existing product, NUPACK from Caltech.

There was a vote, and if you look through the issues listed against the project on CodePlex you can see some of the other suggestions.

(Notice how none of the names available in the original vote are “NuGet”..)

Finally we have NuGet! The associated CodePlex work item actually proposed “Nugget”, but that was changed to NuGet.

A NuGet package is essentially the same as a gem: an archive with associated metadata in a manifest file (.nuspec for NuGet, .gemspec for gems). It’s blindingly simple in concept, but takes a crapload of effort and smarts to get everything working smoothly around that simplicity.

All of the details for creating a package are on the NuGet website.

We decided to use MyGet initially to kick off our own private NuGet feed (but will most likely migrate shortly to a self-hosted solution; I mean, look at how easy it is! Install-Package NuGet.Server, deploy, profit!)

The only slight complexity was allowing the private feed’s authentication to be saved with the package restore information; I’ll get on to this shortly as there are a couple of options.

Once you’ve created a project that you’d like to share across other projects, it’s simply a matter of opening a prompt in the directory where your csproj file lives and running:

nuget spec

to create the nuspec file ready for you to configure, which looks like this:

[xml]
<?xml version="1.0"?>
<package >
  <metadata>
    <id>$id$</id>
    <version>$version$</version>
    <title>$title$</title>
    <authors>$author$</authors>
    <owners>$author$</owners>
    <licenseUrl>http://LICENSE_URL_HERE_OR_DELETE_THIS_LINE</licenseUrl>
    <projectUrl>http://PROJECT_URL_HERE_OR_DELETE_THIS_LINE</projectUrl>
    <iconUrl>http://ICON_URL_HERE_OR_DELETE_THIS_LINE</iconUrl>
    <requireLicenseAcceptance>false</requireLicenseAcceptance>
    <description>$description$</description>
    <releaseNotes>Summary of changes made in this release of the package.</releaseNotes>
    <copyright>Copyright 2014</copyright>
    <tags>Tag1 Tag2</tags>
  </metadata>
</package>
[/xml]

Fill in the blanks and then run:

nuget pack YourProject.csproj

to end up with a .nupkg file in your working directory.
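Since nuget spec leaves sample values in place, a quick check before packing saves you pushing a package with a placeholder licence URL. A minimal sketch, assuming grep is available; check_nuspec is a hypothetical helper, and the patterns are simply the sample values from the generated template.

```shell
#!/bin/sh
# List any .nuspec lines still holding the sample values that `nuget spec` generates.
# Returns 1 if any are found, 2 if the file is missing, 0 if it looks filled in.
check_nuspec() {
  nuspec=$1
  [ -f "$nuspec" ] || { echo "no such file: $nuspec" >&2; return 2; }
  if grep -n \
      -e '_HERE_OR_DELETE_THIS_LINE' \
      -e 'Summary of changes made in this release' \
      -e '<tags>Tag1 Tag2</tags>' \
      "$nuspec"; then
    echo "** $nuspec still contains template values; fill them in or delete them" >&2
    return 1
  fi
  return 0
}

# Usage: check_nuspec YourProject.nuspec && nuget pack YourProject.csproj
```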

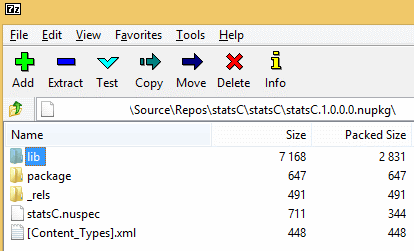

As previously mentioned, this is just an archive. As such you can open it yourself in 7Zip or similar and find something like this:

Your compiled dll can be found in the lib dir.

If you’re using MyGet then you can upload your nupkg via the MyGet website directly into your feed.

If you like the command line, and I do like my command line, then you can use the nuget command to do this for you:

nuget push MyPackage.1.0.0.nupkg <your api key> -Source https://www.myget.org/F/<your feed name>/api/v2/package

Once this has completed your package will be available at your feed, ready for referencing within your own projects.

If you’re using a feed that requires authentication then there are a couple of options.

Edit your NuGet sources (Options -> Package Manager -> Package Sources) and add in your main feed URL, e.g.

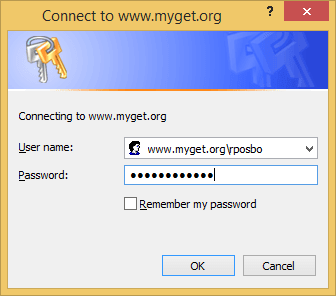

http://www.myget.org/F/<your feed name>/

If you do this against a private feed then an attempt to install a package pops up a Windows auth prompt:

This will certainly work locally, but you may have problems when using a build server such as TeamCity or Visual Studio Online, since there’s nobody around to answer the authentication prompt.

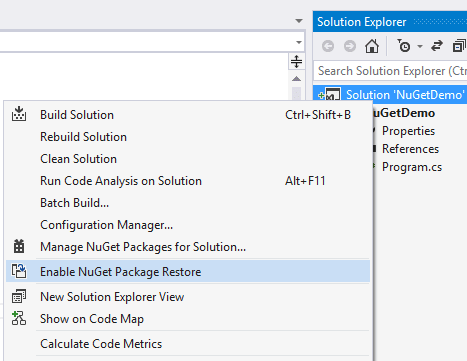

One solution to this is to actually include your password (in plain text – eep!) in your nuget.config file. To do this, right click your solution and select “Enable Package Restore”.

This will create a .nuget folder in your solution containing the nuget executable, a config file and a targets file. Initially the config file will be pretty bare. If you edit it and add in something similar to the following then your package restore will use the supplied credentials for the defined feeds:

[xml]
<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <solution>
    <add key="disableSourceControlIntegration" value="true" />
  </solution>
  <packageSources>
    <clear />
    <add key="nuget.org" value="https://www.nuget.org/api/v2/" />
    <add key="Microsoft and .NET" value="https://www.nuget.org/api/v2/curated-feeds/microsoftdotnet/" />
    <add key="MyFeed" value="https://www.myget.org/F/<feed name>/" />
  </packageSources>
  <disabledPackageSources />
  <packageSourceCredentials>
    <MyFeed>
      <add key="Username" value="myusername" />
      <add key="ClearTextPassword" value="mypassword" />
    </MyFeed>
  </packageSourceCredentials>
</configuration>
[/xml]

So we resupply the package sources (we need to clear them first, otherwise you get duplicates), then add a packageSourceCredentials section with an element matching the name you gave your package source in the section above it.

Alternative Approach

Don’t like plain text passwords? Prefer auth tokens? Course ya do. Who doesn’t? In that case, another option is to use the secondary feed URL MyGet provides instead of the primary one. It contains your auth token (which can be rescinded at any time) and looks like:

https://www.myget.org/F/<your feed name>/auth/<auth token>/

Notice the extra “auth/blah-blah-blah” at the end of this version.
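If your build server keeps the token in an environment variable, the token-bearing URL can be assembled at build time instead of being committed anywhere. A minimal sketch; myget_feed_url is a hypothetical helper, and the two URL shapes are just the primary and auth-token forms shown in this post.

```shell
#!/bin/sh
# Print a MyGet feed URL; with a token, use the ".../auth/<token>/" form.
myget_feed_url() {
  feed=$1
  token=${2:-}
  if [ -n "$token" ]; then
    printf 'https://www.myget.org/F/%s/auth/%s/\n' "$feed" "$token"
  else
    printf 'https://www.myget.org/F/%s/\n' "$feed"
  fi
}

# Usage (MYGET_TOKEN is a hypothetical variable set by your build server):
#   nuget install SomePackage -Source "$(myget_feed_url myfeed "$MYGET_TOKEN")"
```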

NuGet as a package manager solution is pretty slick. And the fact that it’s open sourced and can easily be self-hosted internally means it’s an obvious solution for managing those shared libraries within your project, personal or corporate.

Having seen this blog post about setting up a development Linux VM in a recent Morning Brew, I had to have a shot at doing it all in a script instead, since it looked like an awful lot of hard work to do it manually.

The post I read covers downloading and installing VirtualBox (which could be scripted also, using the amazing Chocolatey) and then installing Ubuntu, logging in to the VM, downloading and installing Chrome, Sublime Text 2, MongoDB, Robomongo, Node.js, NPM, nodemon, and mocha.

Since all of this can be handled via apt-get and a few other cunning configs, here’s my attempt using Vagrant. Firstly, vagrant init a directory, then paste the following into the Vagrantfile:

[ruby]
Vagrant.configure(2) do |config|
  config.vm.box = "precise32"
  config.vm.box_url = "http://files.vagrantup.com/precise32.box"
end
[/ruby]

Now create a new file in the same directory as the Vagrantfile (since this directory is automatically configured as a shared folder, saving you ONE ENTIRE LINE OF CONFIGURATION), calling it something like set_me_up.sh. I apologise for the constant abuse of > /dev/null; I just liked having a clear screen sometimes:

[bash]
#!/bin/bash
# bash rather than sh: "read -p" isn't supported by dash, Ubuntu's /bin/sh
clear
echo "******************************************************************************"
echo "Don’t go anywhere – I’m going to need your input shortly.."
read -p "[Enter to continue]"

### Set up dependencies
# Configure sources & repos
echo "** Updating apt-get"
sudo apt-get update -y > /dev/null
echo "** Installing prerequisites"
sudo apt-get install libexpat1-dev libicu-dev git build-essential curl software-properties-common python-software-properties -y > /dev/null

### deal with interactive stuff first
## needs someone to hit "enter"
echo "** Adding a new repo ref – hit Enter"
sudo add-apt-repository ppa:webupd8team/sublime-text-2

echo "** Creating a new user; enter some details"
## needs someone to enter user details
sudo adduser developer

echo "******************************************************************************"
echo "OK! All done, now it’s the unattended stuff. Go make coffee. Bring me one too."
read -p "[Enter to continue]"

### Now the unattended stuff can kick off
# For mongo db – http://docs.mongodb.org/manual/tutorial/install-mongodb-on-ubuntu/
echo "** More prerequisites for mongo and chrome"
sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv 7F0CEB10 > /dev/null
sudo sh -c 'echo "deb http://downloads-distro.mongodb.org/repo/ubuntu-upstart dist 10gen" | sudo tee /etc/apt/sources.list.d/mongodb.list' > /dev/null
# For chrome – http://ubuntuforums.org/showthread.php?t=1351541
wget -q -O - https://dl-ssl.google.com/linux/linux_signing_key.pub | sudo apt-key add -
# Chrome's apt source is needed too, otherwise google-chrome-stable can't be found
echo "deb http://dl.google.com/linux/chrome/deb/ stable main" | sudo tee /etc/apt/sources.list.d/google-chrome.list > /dev/null
echo "** Updating apt-get again"
sudo apt-get update -y > /dev/null

## Go, go, gadget installations!
# chrome
echo "** Installing Chrome"
sudo apt-get install google-chrome-stable -y > /dev/null
# sublime-text
echo "** Installing sublimetext"
sudo apt-get install sublime-text -y > /dev/null
# mongo-db
echo "** Installing mongodb"
sudo apt-get install mongodb-10gen -y > /dev/null
# desktop!
echo "** Installing ubuntu-desktop"
sudo apt-get install ubuntu-desktop -y > /dev/null

# node – the right(?) way!
# http://www.joyent.com/blog/installing-node-and-npm
# https://gist.github.com/isaacs/579814
echo "** Installing node"
echo 'export PATH="$HOME/local/bin:$PATH"' >> ~/.bashrc
. ~/.bashrc
mkdir -p ~/local
mkdir -p ~/node-latest-install
cd ~/node-latest-install
curl http://nodejs.org/dist/node-latest.tar.gz | tar xz --strip-components=1
./configure --prefix="$HOME/local"
make install

# other node goodies: -g so the CLIs land in ~/local/bin; no sudo, since
# ~/local belongs to us (and sudo's PATH wouldn't find our npm anyway)
npm install -g nodemon > /dev/null
npm install -g mocha > /dev/null

## shutdown message (need to start from VBox now we have a desktop env)
echo "******************************************************************************"
echo "**** All good – now quitting. Run *vagrant halt* then restart from VBox to go to desktop ****"
read -p "[Enter to shutdown]"
sudo shutdown -h now
[/bash]

The gist is here, should you want to fork and edit it.

You can now open a prompt in that directory and run

[bash]
vagrant up && vagrant ssh
[/bash]

which will provision your VM and ssh into it. Once connected, just execute the script by running:

[bash]
. /vagrant/set_me_up.sh
[/bash]

(/vagrant is the shared directory created for you by default)
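Because stdout goes to /dev/null throughout, a failing apt-get only leaves whatever it wrote to stderr, which is easy to miss as it scrolls by. A minimal sketch of a wrapper that keeps the screen clear but shouts when something fails, assuming bash; run_quiet is a hypothetical helper and isn’t part of the gist.

```shell
#!/bin/bash
# Run a command with all output hidden; on failure, report the command,
# show the tail of its output, and pass its exit status back to the caller.
run_quiet() {
  local log status
  log=$(mktemp) || return 1
  "$@" > "$log" 2>&1
  status=$?
  if [ "$status" -ne 0 ]; then
    echo "** FAILED ($status): $*" >&2
    tail -n 20 "$log" >&2   # last few lines of output, for context
  fi
  rm -f "$log"
  return "$status"
}

# Usage: run_quiet sudo apt-get install sublime-text -y
```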

Let’s break this up a bit. First up, I decided to group together all of the apt-get configuration so I didn’t need to keep calling apt-get update after each reconfiguration:

[bash]
# Configure sources & repos
echo "** Updating apt-get"
sudo apt-get update -y > /dev/null
echo "** Installing prerequisites"
sudo apt-get install libexpat1-dev libicu-dev git build-essential curl software-properties-common python-software-properties -y > /dev/null

### deal with interactive stuff first
## needs someone to hit "enter"
echo "** Adding a new repo ref – hit Enter"
sudo add-apt-repository ppa:webupd8team/sublime-text-2
[/bash]

Then I decided to set up a new user, since you will be left with either the vagrant user or a guest user once this script has completed; and the vagrant one doesn’t have a desktop/home nicely configured for it. So let’s create our own one right now:

[bash]
echo "** Creating a new user; enter some details"
## needs someone to enter user details
sudo adduser developer

echo "******************************************************************************"
echo "OK! All done, now it’s the unattended stuff. Go make coffee. Bring me one too."
read -p "[Enter to continue]"
[/bash]

OK, now that the interactive stuff is done, let’s get down to the installation guts:

[bash]
### Now the unattended stuff can kick off
# For mongo db – http://docs.mongodb.org/manual/tutorial/install-mongodb-on-ubuntu/
echo "** More prerequisites for mongo and chrome"
sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv 7F0CEB10 > /dev/null
sudo sh -c 'echo "deb http://downloads-distro.mongodb.org/repo/ubuntu-upstart dist 10gen" | sudo tee /etc/apt/sources.list.d/mongodb.list' > /dev/null
# For chrome – http://ubuntuforums.org/showthread.php?t=1351541
wget -q -O - https://dl-ssl.google.com/linux/linux_signing_key.pub | sudo apt-key add -
# Chrome's apt source is needed too, otherwise google-chrome-stable can't be found
echo "deb http://dl.google.com/linux/chrome/deb/ stable main" | sudo tee /etc/apt/sources.list.d/google-chrome.list > /dev/null
echo "** Updating apt-get again"
sudo apt-get update -y > /dev/null
[/bash]

Notice the URLs in there referencing where I found out the details for each section.

The only reason these config sections are not at the top with the others is that they can take a WHILE and I don’t want the user to have to wait too long before creating a user and being told they can go away. Now we’re all configured, let’s get installing!

[bash]
## Go, go, gadget installations!
# chrome
echo "** Installing Chrome"
sudo apt-get install google-chrome-stable -y > /dev/null
# sublime-text
echo "** Installing sublimetext"
sudo apt-get install sublime-text -y > /dev/null
# mongo-db
echo "** Installing mongodb"
sudo apt-get install mongodb-10gen -y > /dev/null
# desktop!
echo "** Installing ubuntu-desktop"
sudo apt-get install ubuntu-desktop -y > /dev/null
[/bash]

Pretty easy so far, right? ‘Course it is. Now let’s install nodejs on Linux the (apparently) correct way. Well, it works better than a system-wide compile from source or apt-getting it.

[bash]
# node – the right(?) way!
# http://www.joyent.com/blog/installing-node-and-npm
# https://gist.github.com/isaacs/579814
echo "** Installing node"
echo 'export PATH="$HOME/local/bin:$PATH"' >> ~/.bashrc
. ~/.bashrc
mkdir -p ~/local
mkdir -p ~/node-latest-install
cd ~/node-latest-install
curl http://nodejs.org/dist/node-latest.tar.gz | tar xz --strip-components=1
./configure --prefix="$HOME/local"
make install
[/bash]
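One snag with this step: every run of the script appends another copy of the PATH export to ~/.bashrc. A minimal sketch of an append-once guard, assuming a grep that supports -x and -F (GNU and BSD grep both do); append_once is a hypothetical helper, not part of the original script.

```shell
#!/bin/sh
# Append a line to a file only if that exact line isn't already there.
append_once() {
  line=$1
  file=$2
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage: append_once 'export PATH="$HOME/local/bin:$PATH"' ~/.bashrc
```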

Now let’s finish up with a couple of nodey lovelies:

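[bash]
# other node goodies: -g so the CLIs land in ~/local/bin; no sudo, since
# ~/local belongs to us (and sudo's PATH wouldn't find our npm anyway)
npm install -g nodemon > /dev/null
npm install -g mocha > /dev/null
[/bash]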

All done! Then it’s just a case of vagrant halting the VM and restarting from VirtualBox (or editing the Vagrantfile to include a line about booting to GUI); you’ll be booted into an Ubuntu desktop login. Use the newly created user to log in and BEHOLD THE AWE.

Enough EPICNESS, now the FAIL…

The original post also installs Robomongo for mongodb administration, but I just couldn’t get that running from a script. Booo! Here’s the script that should have worked; please have a crack and try to sort it out! qt5 fails to install for me which then causes everything else to bomb out.

[bash]
# robomongo
INSTALL_DIR=$HOME/opt
TEMP_DIR=$HOME/tmp

# doesn’t work
sudo apt-get install -y git qt5-default qt5-qmake scons cmake

# Get the source code from Git. Perform a shallow clone to reduce download time.
# (No sudo from here on: everything lives under $HOME, and sudo-created
# directories would stop the plain make below from writing its output.)
mkdir -p "$TEMP_DIR"
cd "$TEMP_DIR"
git clone --depth 1 https://github.com/paralect/robomongo.git

# Compile the source.
mkdir -p robomongo/target
cd robomongo/target
cmake .. -DCMAKE_BUILD_TYPE=Release -DCMAKE_INSTALL_PREFIX="$INSTALL_DIR"
make
make install

# As of the time of this writing, the Robomongo makefile doesn’t actually
# install into the specified install prefix, so we have to install it manually.
mkdir -p "$INSTALL_DIR"
mv install "$INSTALL_DIR/robomongo"
mkdir -p "$HOME/bin"
ln -s "$INSTALL_DIR/robomongo/bin/robomongo.sh" "$HOME/bin/robomongo"

# Clean up.
rm -rf "$TEMP_DIR/robomongo"
[/bash]

Not only is there the gist, but the whole shebang is over on github too.

ENJOOOYYYYY!

If you’ve ever had a basic html website (or not so basic site, but with static html pages in it nonetheless) and eventually got around to migrating to an extensionless URL framework (e.g. MVC), then you may have had to think about setting the correct redirects so as to not dilute your SEO rating.

This is my recent adventure in doing just that within an MVC5 site taking over a selection of html pages from an older version of the site.

As your site is visited and crawled it will build up a score and a reputation among search engines; certain pages may appear on the first page for certain search terms. This tends to be referred to as “search juice”. Ukk.

If you change your structure you can do one of the following:

Forget about the old ones; users might be annoyed if they’ve bookmarked something (but seriously, who bookmarks anything anymore?..), but also any reputation and score you have with the likes of Google will have to start again from scratch.

This is obviously the easiest option.

Dead as a dodo; gives an HTTP404:

http://mysite.com/aboutme.html

Alive and kickiiiiing:

http://mysite.com/aboutme

Good for humans, but in the short term you’ll be diluting your SEO score for that resource since it appears to be two separate resources (with the score split between them).

Both return an HTTP200 and display the same content as each other:

http://mysite.com/aboutme.html

http://mysite.com/aboutme

Should you want to do this in MVC, firstly you need to capture the requests for .html files. These aren’t normally handled by .Net since there’s no processing to be done here; you might as well be processing .js files, or .css, or .jpg.

For .Net to capture requests for html I have seen most people use this abomination:

[xml]
<modules runAllManagedModulesForAllRequests="true" />
[/xml]

This will cause every single request to be captured and go through the .Net pipeline, even though there’s most likely nothing for it to do. Waste of processing power, and of everyone’s time.

Using this as a reference, I discovered you can define a handler to match individual patterns:

[xml]
<add name="HtmlFileHandler" path="*.html" verb="GET"
     type="System.Web.Handlers.TransferRequestHandler"
     preCondition="integratedMode,runtimeVersionv4.0" />
[/xml]

Pop that into the handlers section of system.webServer in your web.config.

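In your RouteConfig you could add something along the lines of the following (register it before the default route, since routes are matched in the order they’re added):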

[csharp]
routes.MapRoute(
    name: "Html",
    url: "{action}.html",
    defaults: new { controller = "Home", action = "Index" }
);
[/csharp]

This will match a route such as /aboutus.html and send it to the Home controller’s AboutUs action (action names aren’t case sensitive), so make sure you have a matching action for each page, e.g.:

[csharp]
public ActionResult AboutUs()
{
    return View();
}

public ActionResult Contact()
{
    return View();
}

public ActionResult Help()
{
    return View();
}
[/csharp]

Or just use a catch-all route…

[csharp]
routes.MapRoute(
    name: "Html",
    url: "{page}.html",
    defaults: new { controller = "Home", action = "SendOverThere" }
);
[/csharp]

and the matching catch-all ActionResult to just display the View:

[csharp]
public ActionResult SendOverThere(string page)
{
    return View(page); // displays the view with the name <page>.cshtml
}
[/csharp]

CORRECT ANSWER!

To preserve the aforementioned juices, you need each page’s action on your controller to return a RedirectResult from RedirectPermanent (an HTTP 301) instead of displaying a view, e.g.:

[csharp]
public RedirectResult AboutUs()
{
    return RedirectPermanent("/AboutUs");
}
[/csharp]

Or use that catch-all route…

[csharp]
routes.MapRoute(
    name: "Html",
    url: "{page}.html",
    defaults: new { controller = "Home", action = "SendOverThere" }
);
[/csharp]

… and action:

[csharp]
public RedirectResult SendOverThere(string page)
{
    return RedirectPermanent(page);
}
[/csharp]

Using Attribute Routing in MVC5 you can do something similar and simpler, directly on your controller, with no per-page route entries in your RouteConfig:

[csharp]
[Route("{page}.html")]
public RedirectResult HtmlPages(string page)
{
    return RedirectPermanent(page);
}
[/csharp]

You just need to switch attribute routing on once, by adding the line below to your RouteConfig (before your other routes):

[csharp]
routes.MapMvcAttributeRoutes();
[/csharp]
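Whichever variant you pick, it’s worth checking what a client actually gets back. A minimal sketch, assuming curl and awk are available; check_redirect is a hypothetical helper that reads response headers and succeeds only for a 301 pointing at the expected location. It asks curl for a GET with the headers dumped (-D -) rather than a HEAD (-I), because the html handler is registered for verb="GET" only, so a HEAD request may never reach MVC.

```shell
#!/bin/sh
# Succeed only if the raw HTTP response headers on stdin are a 301
# whose Location header equals the expected value ($1).
check_redirect() {
  awk -v want="$1" '
    { sub(/\r$/, "") }                           # strip CRLF line endings
    NR == 1 { status = $2 }                      # e.g. "HTTP/1.1 301 Moved Permanently"
    tolower($1) == "location:" { location = $2 }
    END { exit !(status == "301" && location == want) }
  '
}

# Usage (adjust the expected value if your server sends an absolute Location):
#   curl -s -o /dev/null -D - http://mysite.com/aboutme.html | check_redirect /aboutme && echo "301: OK"
```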

This routing always drives me crazy. I find routing problems extremely hard to debug, which is why I’d like to point you towards Phil Haack’s RouteDebugger NuGet package and the accompanying article,

or even Glimpse.

Both of these have the incredible ability to analyse any URL you enter against any routes you’ve set up, telling you which ones hit (if any). This helped me no end when I finally discovered my .html page URL was being captured, but it was looking for an action of “aboutus.html” on the controller “home”.

Superscribe is an open source project by @roysvork that uses graph-based routing to implement unit-testable, fluent routing, which looks really clever. I’ll give that a shot for my next project.

Good luck, whichever route you take!

(see what I did there?..)

Creating functionality in Azure to subscribe to messages on a queue is so 2013. You have to set up a service bus, learn about queues, topics, maybe subscribers and filters, blah, BLAH, BLAH.

Let’s not forget configuring builds and deployments and all that.

Fun though this may be, what if you just want the equivalent of a cronjob or scheduled task kicking off every few hours or days for some long-running or CPU-intensive task?

Well, sure, you could create a VM, log in, and configure cron/scheduled tasks. However, all the cool kids are using webjobs instead these days. Get with the programme, grandpa! (Or something)

It depends. Each version is the equivalent of a small console app that lives in the ether (i.e., on some company’s server in a rainy field in Ireland), but how it kicks off the main functionality is different.

These webjobs can be deployed as part of a website, or ftp-ed into a specific directory; we’ll get onto this a bit later. Once there, you have an extra dashboard available which gives you a breakdown of the job execution history.

It uses the Microsoft.WindowsAzure.Jobs assemblies, which are best installed via NuGet:

Install-Package Microsoft.WindowsAzure.Jobs.Host -Pre

(Notice the “-Pre”: this item is not fully cooked yet. It might need a few more months before you can safely eat it without fear of intestinal infrastructure blowout.)

Let’s check out the guts of that assembly shall we?

We’ll get onto these attributes momentarily; essentially, these allow you to decorate a public method for the job host to pick up and execute when the correct event occurs.

Here’s how the method will be called:

Notice that all of the other demos around at the moment not only use RunAndBlock, but they don’t even use the CancellationToken version (if you have a long running process, stopping it becomes a lot easier if you’re able to expose a cancellation token to another – perhaps UI – thread).

Right now you have limited options for deploying a webjob.

For each option you firstly need to create a new Website. Then click the WebJobs (Preview) tab at the top. Click Add at the bottom.

Now it gets Old Skool.

Zip the contents of your app’s bin/Debug folder and call it WebJob.zip (I don’t actually know if the name matters).

Enter the name for your job and browse to the zip file to upload.

You can choose to run it continuously, on a schedule (if you have the Azure Scheduler Preview enabled), or on demand.

There’s a great article on asp.net covering this method.

Webjobs are automagically picked up by convention from a particular directory structure. As such, you can choose to FTP the files that would have been in the zip (or deploy them another way: perhaps via WebDeploy within the hosting website itself, a git commit hook, or a build server) into:

site\wwwroot\App_Data\jobs\{job type}\{job name}

What I’ve discovered doing this is that scheduled jobs are in fact triggered jobs (they live under the triggered folder; the other job type is continuous), which means they are kicked off via an HTTP POST from the Azure Scheduler.

There’s a nice intro to this over on Amit Apple’s blog
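If you’re pushing files yourself rather than uploading a zip, the copy step mostly comes down to getting the folder names right. A minimal sketch, assuming you have a local folder mirroring site\wwwroot that you then FTP or WebDeploy up, and that {job type} is either continuous or triggered, which is how I understand the convention; deploy_webjob and its arguments are hypothetical.

```shell
#!/bin/sh
# Copy a WebJob's build output into <site root>/App_Data/jobs/<job type>/<job name>.
deploy_webjob() {
  build_dir=$1   # e.g. bin/Debug
  site_root=$2   # local folder mirroring site/wwwroot
  job_type=$3    # continuous or triggered
  job_name=$4
  case "$job_type" in
    continuous|triggered) ;;
    *) echo "job type must be 'continuous' or 'triggered'" >&2; return 1 ;;
  esac
  target="$site_root/App_Data/jobs/$job_type/$job_name"
  mkdir -p "$target" && cp -R "$build_dir"/. "$target"/
}

# Usage: deploy_webjob bin/Debug ./wwwroot triggered SignupMailer
```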

Remember those attributes?

One version can be configured to monitor a Storage Queue (NOT a service bus queue, as I found out after writing an entire application to do this, deploy it, then click around the portal for an entire morning, certain I was missing a checkbox somewhere).

By using the attribute [QueueInput] (and optionally [QueueOutput]) your method can be configured to automatically monitor a Storage Queue, and execute when the appropriate queue has something on it.

A method with the attribute [BlobInput] (and optionally [BlobOutput]) will kick off when a blob storage container has something uploaded into it.

Woah there!

Yep, that’s right. Just by using a reference to an assembly and a couple of attributes you can shortcut the entire palaver of configuring Azure connections to a namespace, a container, creating a block blob reference, and/or a queue client, etc.; it’s just there.

Crazy, huh?

When you upload your webjob you are assigned a POST endpoint to that job; this allows you to either click a button in the Azure dashboard to execute the method, or alternatively execute it via an HTTP POST using Basic Auth (automatically configured at the point of upload and available within the WebJobs tab of your website).

You’ll need a [NoAutomaticTrigger] or [Description] attribute on this one.
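Triggering from a script instead of the dashboard button is a curl one-liner. This is a sketch under a stated assumption: the URL shape (the site’s scm host plus /api/triggeredwebjobs/<job>/run) is what I believe Kudu exposes, so copy the real URL and Basic Auth credentials from your site’s WebJobs tab rather than trusting mine. run_webjob and DRY_RUN are hypothetical names.

```shell
#!/bin/sh
# POST to an on-demand WebJob's run endpoint using Basic Auth.
# ASSUMPTION: the URL shape below; check it against your WebJobs tab.
# Set DRY_RUN=1 to print the command (password masked) instead of running it.
run_webjob() {
  site=$1
  job=$2
  user=$3
  pass=$4
  url="https://$site.scm.azurewebsites.net/api/triggeredwebjobs/$job/run"
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "curl -X POST -u $user:**** $url"
  else
    curl -fsS -X POST -u "$user:$pass" "$url"
  fi
}

# Usage: run_webjob mysite SignupMailer '$mysite' "$DEPLOY_PASSWORD"
```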

If you successfully manage to sign up for the Azure Scheduler Preview then you will have an extra option in your Azure menu:

Where you can even add a new schedule:

This isn’t going to add a new WebJob, just a new schedule; however adding a new scheduled webjob will create one of these implicitly.

In terms of attributes, it’s the same as On Demand.

Remember those methods?

These require your app’s Main method to instantiate a JobHost and call RunAndBlock. The job host will find the methods decorated with the key attributes and, depending on the attributes used, fire them when certain events occur.

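[csharp]
using System.IO;
using Microsoft.WindowsAzure.Jobs; // from the Microsoft.WindowsAzure.Jobs.Host pre-release package

class Program
{
    static void Main()
    {
        // Find the attribute-decorated methods in this assembly and keep
        // running, firing them whenever a matching blob arrives.
        JobHost h = new JobHost();
        h.RunAndBlock();
    }

    public static void MyAwesomeWebJobMethod(
        [BlobInput("in/{name}")] Stream input,
        [BlobOutput("out/{name}")] Stream output)
    {
        // A new cat picture! Resize all the things!
    }
}
[/csharp]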

Scott Hanselman has a great example of this.

Using some basic reflection you can look at the class itself (in my case it’s called “Program”) and get a reference to the method you want to call such that each execution just calls that method and stops.

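[csharp]
using Microsoft.WindowsAzure.Jobs; // from the Microsoft.WindowsAzure.Jobs.Host pre-release package

class Program
{
    static void Main()
    {
        // Call the one method directly, then exit once it has finished.
        var host = new JobHost();
        host.Call(typeof(Program).GetMethod("MyAwesomeWebJobMethod"));
    }

    [NoAutomaticTrigger]
    public static void MyAwesomeWebJobMethod()
    {
        // go find epic cat pictures and send for lulz.
    }
}
[/csharp]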

I needed to add in that [NoAutomaticTrigger] attribute, otherwise the webjob would fail completely due to no valid method existing.

WebJobs are fantastic for those offline, possibly long running tasks that you’d rather not have to worry about implementing in a website or a cloud service worker role.

I plan to use them for various small functions; at Mailcloud we already use a couple to send the sign-ups for the past few days to email and hipchat each morning.

Have a go with the non-scheduled jobs, but if you get the chance to use Azure Scheduler it’s pretty cool!

Good luck!

Oh, what’s that? You need me to process a huge file and spew out the data plus its Base64? I’d better write a new .Net proje-OH NO WAIT A MINUTE I DON’T HAVE TO ANY MORE!

ScriptCS is C# from the command line; no project file, no visual studio (.. no intellisense..), but it’s perfect for little hacks like these.

First, go and install the Chocolatey package manager:

c:> @powershell -NoProfile -ExecutionPolicy unrestricted -Command "iex ((new-object net.webclient).DownloadString('https://chocolatey.org/install.ps1'))" && SET PATH=%PATH%;%systemdrive%\chocolatey\bin

Then use Chocolatey to install scriptcs:

cinst scriptcs

Then open your text editor of choice (I used Sublime Text 2 for this one), write some basic C# plus references, and save it as e.g. hasher.csx:

[csharp]
using System;
using System.Text;
using System.IO;
using System.Collections.Generic;

var sourceFile = @"C:\Users\rposbo\import.txt";
var destinationFile = @"C:\Users\rposbo\export.txt";

// Read every line of the input file into memory.
var lines = File.ReadAllLines(sourceFile);
var newOutput = new List<string>();

foreach (var line in lines)
{
    // Base64-encode the line's bytes and append it after a comma.
    var hash = Convert.ToBase64String(Encoding.Default.GetBytes(line));
    newOutput.Add(string.Format("{0},{1}", line, hash));
}

// Write the "original,base64" pairs out, one per line.
File.WriteAllLines(destinationFile, newOutput.ToArray());
[/csharp]

Then run that from the command line:

c:\Users\rposbo\> scriptcs hasher.csx

And you’ll end up with your output; minimal fuss and effort.
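Two things are worth knowing about that script. First, Base64 is an encoding, not a hash, so it’s trivially reversible. Second, `Encoding.Default` depends on the machine (the ANSI code page on .NET Framework, UTF-8 on .NET Core), so the same file can produce different output on different boxes. Below is a minimal sketch of the per-line transform pulled into a hypothetical `LineEncoder` helper with an explicit UTF-8 encoding, which also makes it easy to test:

```csharp
using System;
using System.Text;

// Hypothetical helper: the same "line,base64" transform as hasher.csx,
// but pinned to UTF-8 so the result doesn't vary with the machine's code page.
public static class LineEncoder
{
    public static string ToCsvWithBase64(string line)
    {
        string encoded = Convert.ToBase64String(Encoding.UTF8.GetBytes(line));
        return string.Format("{0},{1}", line, encoded);
    }

    // Reverses the encoding, showing that Base64 is not a one-way hash.
    public static string FromBase64(string encoded)
    {
        return Encoding.UTF8.GetString(Convert.FromBase64String(encoded));
    }
}
```

In the script, the loop body would then become `newOutput.Add(LineEncoder.ToCsvWithBase64(line));`.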

This past week I’ve been lucky enough to try my hand at something new to me. Throughout my career my work has consisted almost entirely of writing code, designing solutions, and managing teams (or teams of teams).

Last week I took a small group of techies through a 4-day Introduction To C# course, covering everything from the basics, such as types and members, through to some pretty advanced stuff (in my opinion), such as multicast delegates and anonymous and lambda methods. Consider that the class hadn’t coded in C# before the Monday, and by Tuesday afternoon we were covering pointers to multiple, potentially anonymous, functions.

I also ran an extra one-on-one session on the Friday to give one of the guys from the 4-day course a bit of an ASP.Net knowledge upgrade, so he could get through a Sitecore course and exam.

I’d not done anything similar to this previously, so was a little nervous – not much though, and not even slightly after the first day was over. Public speaking is something that you can easily overcome; I used to be terrified but now you can’t shut me up, even in front of a hundred techies…

The weekend prior to the course starting I found myself painstakingly researching things that have, for almost a decade, been things I “just knew”. I picked up .Net by joining a company that was using it (VB.Net at the time) and staying there for over 5 years. I didn’t take any “Intro” courses as I didn’t think I needed to; I understood the existing code just fine and could develop stuff that seemed to suit the current paradigm, so I must be fine.. right?

The weekend of research tested my exam-cram ability (absorbing a huge amount of information and processing it in a short amount of time!) as I finally learned the theory behind things I’ve just been doing for over 8 years. It turns out I could have done a lot of stuff much better if I’d had the grounding that the course attendees, and now I, have.

Each evening I’d get home, mentally exhausted from trying to pull together the extremely comprehensive information on the slides with both my experiences and my research, trying to end up with cohesive information which the class would understand and be able to use. That was one of the hard parts.

Every evening I’d have to work through what I had planned to cover the next day and if there was anything I was even slightly unsure of I’d hit the googles and stackoverflows until I had enough information to fully comprehend that point in such a way I could explain it to others – potentially from several perspectives, and with pertinent examples, including coming up with a few quick “watch me code” lines.

Once I’d got all of the technical info settled in my noggin, then came the real challenge: trying to make this expansive course relevant to each attendee. A couple of them were learning C# in order to learn ASP.Net so that they could move into .Net web development, whilst one was mainly learning to support and develop WinForms apps. Also, each one was absorbing and processing the information at a different speed, and one even had to leave for a day to support a production issue, returning the day after! How do you deal with that gap in someone’s knowledge and keep it all relevant without duplicating sections for the others?

I’m booked in to lead an Advanced C# course next month and an ASP.Net one the month after, plus I’m looking at the MVC course at some point. All whilst working for a startup at the same time (more on that soon)! 2014 is going to be EPIC. It already is, actually..

I’m sure others could, and (since I’ve heard about people who do this) would, blag it if there was something they didn’t know, since, hey, these attendees aren’t going to be able to correct me, are they?! This is an Intro course!

That’s obviously lame, but for a reason in addition to the one you would imagine; you’re cheating yourself if you do that. I have learned SO MUCH more information to surround my existing experience that I can frame all coding decisions that much better. Forget committing Design Patterns to memory if you don’t know what an event actually is. Sure, it’s basic, but it’s also fundamental.

Teaching is hard.

I like it.

You might too.

Last episode I introduced the concept of utilising RazorEngine and RazorMachine to generate html files from cshtml Razor view files and json data files, without needing a hosted ASP.Net MVC website.

We ended up with a teeny ikkle console app which could reference a few directories and spew out some resulting html.

This post will build on that concept and use Azure Blob Storage with a worker role.

We already have a basic RazorEngine implementation via the RenderHtmlPage class, and that class uses some basic dependency injection/poor man’s IoC to abstract the functionality to pass in the Razor view, the json data, and the output location.

The previous implementation of the IContentRepository interface merely read from the file system:

[csharp]
using System.IO;

namespace CreateFlatFileWebsiteFromRazor
{
    internal class FileSystemContentRepository : IContentRepository
    {
        private readonly string _rootDirectory;
        private const string Extension = ".cshtml";

        public FileSystemContentRepository(string rootDirectory)
        {
            _rootDirectory = rootDirectory;
        }

        public string GetContent(string id)
        {
            // Reads <root>/<id>.cshtml, e.g. content/product.cshtml
            var result =
                File.ReadAllText(string.Format("{0}/{1}{2}", _rootDirectory, id, Extension));
            return result;
        }
    }
}
[/csharp]

A similar story for the IDataRepository file system implementation:

[csharp]
using System.IO;

namespace CreateFlatFileWebsiteFromRazor
{
    internal class FileSystemDataRepository : IDataRepository
    {
        private readonly string _rootDirectory;
        private const string Extension = ".json";

        public FileSystemDataRepository(string rootDirectory)
        {
            _rootDirectory = rootDirectory;
        }

        public string GetData(string id)
        {
            // Reads <root>/<id>.json, e.g. data/1.json
            var results =
                File.ReadAllText(string.Format("{0}/{1}{2}", _rootDirectory, id, Extension));
            return results;
        }
    }
}
[/csharp]

Likewise for the file system implementation of IUploader:

[csharp]
using System.IO;

namespace CreateFlatFileWebsiteFromRazor
{
    internal class FileSystemUploader : IUploader
    {
        private readonly string _rootDirectory;
        private const string Extension = ".html";

        public FileSystemUploader(string rootDirectory)
        {
            _rootDirectory = rootDirectory;
        }

        public void SaveContentToLocation(string content, string location)
        {
            // Writes <root>/<location>.html, overwriting any existing file.
            File.WriteAllText(
                string.Format("{0}/{1}{2}", _rootDirectory, location, Extension), content);
        }
    }
}
[/csharp]

All pretty simple stuff.
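Because RenderHtmlPage only ever sees the interfaces, a third implementation is just as easy. Here’s a minimal sketch of in-memory versions, handy for unit-testing the rendering without touching disk or storage. The interface declarations are inferred from the implementations above and may not match the project’s exact definitions; the InMemory* class names are hypothetical.

```csharp
using System.Collections.Generic;

// Interface shapes inferred from the file system implementations above
// (assumption: the real interfaces may declare more members).
public interface IContentRepository { string GetContent(string id); }
public interface IDataRepository { string GetData(string id); }
public interface IUploader { void SaveContentToLocation(string content, string location); }

// Hypothetical: serves Razor views from a dictionary keyed by view name.
public class InMemoryContentRepository : IContentRepository
{
    private readonly IDictionary<string, string> _views;

    public InMemoryContentRepository(IDictionary<string, string> views) { _views = views; }

    public string GetContent(string id) { return _views[id]; }
}

// Hypothetical: serves json data from a dictionary keyed by id.
public class InMemoryDataRepository : IDataRepository
{
    private readonly IDictionary<string, string> _data;

    public InMemoryDataRepository(IDictionary<string, string> data) { _data = data; }

    public string GetData(string id) { return _data[id]; }
}

// Hypothetical: captures everything "uploaded" so a test can inspect it.
public class InMemoryUploader : IUploader
{
    public readonly Dictionary<string, string> Saved = new Dictionary<string, string>();

    public void SaveContentToLocation(string content, string location) { Saved[location] = content; }
}
```

Wiring it up mirrors the console app: `new RenderHtmlPage(new InMemoryContentRepository(views), new InMemoryDataRepository(data))`, then assert on `uploader.Saved` after the render loop.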

All I’m doing here is changing those implementations to use blob storage instead. In order to do this it’s worth having a class to wrap up the common functions such as getting references to your storage account. I’ve given mine the ingenious title of BlobUtil:

[csharp]
using System.IO;
using System.Text;
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.Blob;

class BlobUtil
{
    private readonly string _cloudConnectionString;

    public BlobUtil(string cloudConnectionString)
    {
        _cloudConnectionString = cloudConnectionString;
    }

    public void SaveToLocation(string content, string path, string filename)
    {
        var cloudBlobContainer = GetCloudBlobContainer(path);
        var blob = cloudBlobContainer.GetBlockBlobReference(filename);

        // Set the content type so browsers render the page rather than download it.
        blob.Properties.ContentType = "text/html";

        using (var ms = new MemoryStream(Encoding.UTF8.GetBytes(content)))
        {
            blob.UploadFromStream(ms);
        }
    }

    public string ReadFromLocation(string path, string filename)
    {
        var blob = GetBlobReference(path, filename);
        string text;

        using (var memoryStream = new MemoryStream())
        {
            blob.DownloadToStream(memoryStream);
            text = Encoding.UTF8.GetString(memoryStream.ToArray());
        }

        return text;
    }

    private CloudBlockBlob GetBlobReference(string path, string filename)
    {
        var cloudBlobContainer = GetCloudBlobContainer(path);
        var blob = cloudBlobContainer.GetBlockBlobReference(filename);
        return blob;
    }

    // "path" is used as the container name. GetContainerReference doesn't create
    // the container, so it must already exist (or call CreateIfNotExists() first).
    private CloudBlobContainer GetCloudBlobContainer(string path)
    {
        var account = CloudStorageAccount.Parse(_cloudConnectionString);
        var cloudBlobClient = account.CreateCloudBlobClient();
        var cloudBlobContainer = cloudBlobClient.GetContainerReference(path);
        return cloudBlobContainer;
    }
}
[/csharp]
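One thing BlobUtil doesn’t guard against: its `path` argument is really a container name, and Azure only accepts container names of 3 to 63 characters made up of lowercase letters, digits and single hyphens, starting and ending with a letter or digit. Rather than finding out from a failed storage request, it’s cheap to check up front. Here’s a minimal sketch of a hypothetical `ContainerNames` helper (it deliberately ignores special containers such as `$root`):

```csharp
using System.Text.RegularExpressions;

// Hypothetical helper: checks a blob container name against the Azure naming rules.
public static class ContainerNames
{
    // 3-63 chars; lowercase letters, digits and hyphens; must start and end with
    // a letter or digit; the negative lookahead rejects consecutive hyphens.
    // \z (rather than $) stops a trailing newline from slipping through.
    private static readonly Regex ValidName =
        new Regex(@"^[a-z0-9](?!.*--)[a-z0-9-]{1,61}[a-z0-9]\z");

    public static bool IsValid(string name)
    {
        return name != null && ValidName.IsMatch(name);
    }
}
```

For example, GetCloudBlobContainer could call `ContainerNames.IsValid(path)` first and throw an ArgumentException when it returns false.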

This means that the blob implementations can be just as simple.

Just connect to the configured storage account and read from the specified location to get the Razor view:

[csharp]
class BlobStorageContentRepository : IContentRepository
{
    private readonly BlobUtil _blobUtil;
    private readonly string _contentRoot;

    public BlobStorageContentRepository(string connectionString, string contentRoot)
    {
        _blobUtil = new BlobUtil(connectionString);
        _contentRoot = contentRoot;
    }

    public string GetContent(string id)
    {
        return _blobUtil.ReadFromLocation(_contentRoot, id + ".cshtml");
    }
}
[/csharp]

Pretty much the same as above, except with a different “file” extension. Blobs don’t need file extensions, but I’m just reusing the same files from before.

[csharp]
public class BlobStorageDataRespository : IDataRepository
{
    private readonly BlobUtil _blobUtil;
    private readonly string _dataRoot;

    public BlobStorageDataRespository(string connectionString, string dataRoot)
    {
        _blobUtil = new BlobUtil(connectionString);
        _dataRoot = dataRoot;
    }

    public string GetData(string id)
    {
        return _blobUtil.ReadFromLocation(_dataRoot, id + ".json");
    }
}
[/csharp]

The equivalent for saving it is similar:

[csharp]
class BlobStorageUploader : IUploader
{
    private readonly BlobUtil _blobUtil;
    private readonly string _outputRoot;

    public BlobStorageUploader(string cloudConnectionString, string outputRoot)
    {
        _blobUtil = new BlobUtil(cloudConnectionString);
        _outputRoot = outputRoot;
    }

    public void SaveContentToLocation(string content, string location)
    {
        _blobUtil.SaveToLocation(content, _outputRoot, location + ".html");
    }
}
[/csharp]

And tying this together is a basic worker role which looks all but identical to the console app:

[csharp]
public override void Run()
{
    var cloudConnectionString =
        CloudConfigurationManager.GetSetting("Microsoft.Storage.ConnectionString");

    IContentRepository content =
        new BlobStorageContentRepository(cloudConnectionString, "content");
    IDataRepository data =
        new BlobStorageDataRespository(cloudConnectionString, "data");
    IUploader uploader =
        new BlobStorageUploader(cloudConnectionString, "output");

    var productIds = new[] { "1", "2", "3", "4", "5" };
    var renderer = new RenderHtmlPage(content, data);

    foreach (var productId in productIds)
    {
        var result = renderer.BuildContentResult("product", productId);
        uploader.SaveContentToLocation(result, productId);
    }
}
[/csharp]
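Worth knowing: if a worker role’s `Run()` returns, Azure treats the role as finished and recycles the instance, so the one-shot loop above will render, return, and get restarted. If you’d rather the role kept regenerating on a schedule, one option is to loop until the role is told to stop. Here’s a minimal sketch using only the BCL; `RenderLoop`, the interval, and cancelling a CancellationTokenSource from `OnStop()` are assumptions, not part of the original project:

```csharp
using System;
using System.Threading;

// Hypothetical helper: repeats a render pass until cancellation is requested.
public static class RenderLoop
{
    // Returns the number of completed passes, which is handy for testing.
    public static int Run(Action renderPass, TimeSpan interval, CancellationToken token)
    {
        int passes = 0;

        while (!token.IsCancellationRequested)
        {
            renderPass();
            passes++;

            // Waits for the interval, but returns true early if cancellation is signalled.
            if (token.WaitHandle.WaitOne(interval))
            {
                break;
            }
        }

        return passes;
    }
}
```

In the worker role, `Run()` might then become `RenderLoop.Run(RenderAllProducts, TimeSpan.FromMinutes(10), _cts.Token);`, where the hypothetical `RenderAllProducts` wraps the foreach loop above and `_cts.Cancel()` is called from `OnStop()`.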

By setting the output container to be public, the HTML files can be browsed to directly; we’ve just created an auto-generated flat file website. You could also mix and match: have the console app read views and data from the local file system but upload the output to blob storage, generating the HTML locally but storing it remotely, where it can be served directly!

Given that we’ve already created the RazorEngine logic, the implementations of content location are bound to be simple, and swapping the file system for blob storage is a snap. Check out the example code over on GitHub.

There are a few more stages in this master plan, and following those I’ll swap some stuff out to extend this some more.