This article assumes that you know the basics of AWS, WebPageTest, SSH, and at least one Linux text editor.

When talking to people about website performance stats, I’ll usually split them into Real User Metrics (RUM) and Automated (Synthetic/Test Lab):

- RUM is performance data collected from real visitors to a website you own and reported into the analytics tool you have integrated.

- Automated tests are scripted tests that you run from your own performance testing tool against any website you like.

RUM is great: you get real performance details from real user devices and can investigate the difference in performance for many different options.

For example, you can compare iPhone vs Android, Mac vs Windows, Mobile vs Desktop, Chrome vs Firefox, UK vs US, even down to ISP and connection type, to see who is getting a good experience and whose experience can be improved.

This data is invaluable in prioritising performance improvements, since you can tie it back to the approximate number of users it will affect, and therefore the impact on your business.

There are loads of vendors who can provide this for you (I’ve used many of them), or you can write your own – if you’re a glutton for punishment (and high AWS bills – ask me how I know…😁)

However, since this is measured on your own website and reported into your own tooling, you can only see such real-world performance detail for your own website; no real-world user experience data from your competitors.

Automated tests are great: you get detailed measurements of any website you can access – your own or competitors, or basically any website – in a repeatable fashion so that you can track changes over time.

You can have as many automated tests as you like, you can test from wherever you’re able to set up a test agent, and with whatever device you’re able to automate or emulate.

However, since these are all automated tests running because you said so, you can’t use them to understand how real users are using your site: which devices they use, which devices underperform others, and where they’re visiting from.

Again, there are a load of vendors who can provide this for you; writing your own is a bit more of a headache though – I wouldn’t recommend it, especially while WebPageTest continues to be free to self-host.

What if you could get some of the usual key performance metrics you’re used to with RUM, but for sites you don’t own such as your competitors?

In this article I’ll talk about the Chrome User Experience Report (CrUX) dataset and how to use it in your performance test setup to find the intersection of RUM and Automated performance test results, wiring it all up into your WebPageTest setup!

When you install Google Chrome, you’re asked if you’re happy to share anonymous usage statistics with Google. Included in these are performance measurements from every site you visit, sent anonymously to the Big G.

"The Chrome User Experience Report is powered by real user measurement of key user experience metrics across the public web, aggregated from users who have opted-in to syncing their browsing history, have not set up a Sync passphrase, and have usage statistic reporting enabled."

The metrics measured come from standard browser APIs, such as Network, Notification, and Paint Timing, plus the usual page events like onload and DOMContentLoaded. Since this is Chrome, you’ll also get the Core Web Vitals: Largest Contentful Paint, First Input Delay, and Cumulative Layout Shift.

Core Web Vitals are currently "experimental" and have limited support; at time of writing you’re looking at Chrome, Edge, Opera, and Samsung Internet only.

All of these stats are pushed to Google, aggregated, and then made available for querying, and even in a really handy Data Studio dashboard. This is where it gets interesting: it’s measured in the same way that RUM is measured – real user data from real browsers – but it’s available for any web page that gets a certain amount of traffic in a given time period.

As such, CrUX could be considered an intersection of RUM and Automated performance testing; you can see aggregated performance data for real users on both your site, and on other sites – such as competitors. You can query the CrUX API to pull this data down and track specific sites or even specific pages (assuming they get enough traffic to appear in the data set) for you and your competitors, seeing how real users experience these sites compared to each other over time.
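If you want to pull that data down yourself, here is a minimal Python sketch of one way to do it. It assumes the public CrUX API `queryRecord` endpoint and its documented request and response shape; the function names (`build_crux_request`, `p75_by_metric`, `fetch_p75`) are made up for this example and are not part of any library.

```python
import json
import urllib.request

# Public CrUX API endpoint; the API key is passed as a query parameter.
CRUX_ENDPOINT = "https://chromeuxreport.googleapis.com/v1/records:queryRecord"


def build_crux_request(api_key, origin, form_factor=None):
    """Return a POST request asking CrUX for one origin's aggregated data."""
    body = {"origin": origin}
    if form_factor:  # e.g. "PHONE", "DESKTOP" or "TABLET"
        body["formFactor"] = form_factor
    return urllib.request.Request(
        f"{CRUX_ENDPOINT}?key={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def p75_by_metric(response):
    """Map each metric name in a queryRecord response to its 75th percentile."""
    metrics = response.get("record", {}).get("metrics", {})
    return {
        name: metric["percentiles"]["p75"]
        for name, metric in metrics.items()
        if "percentiles" in metric
    }


def fetch_p75(api_key, origin, form_factor=None):
    """Query CrUX over the network and return the p75 values for one origin."""
    request = build_crux_request(api_key, origin, form_factor)
    with urllib.request.urlopen(request, timeout=30) as reply:
        return p75_by_metric(json.load(reply))
```

Calling `fetch_p75("<your CrUX API key>", "https://www.example.com", "PHONE")` for your own origin and for each competitor on a schedule would give you a simple series to chart over time. The values are passed through exactly as the API returns them, and an origin that isn't in the dataset comes back as an HTTP error, which `urllib` raises as an exception.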

What’s the easiest way to make use of this VITAL information? I’m glad you asked.

CrUX on Public WebPageTest

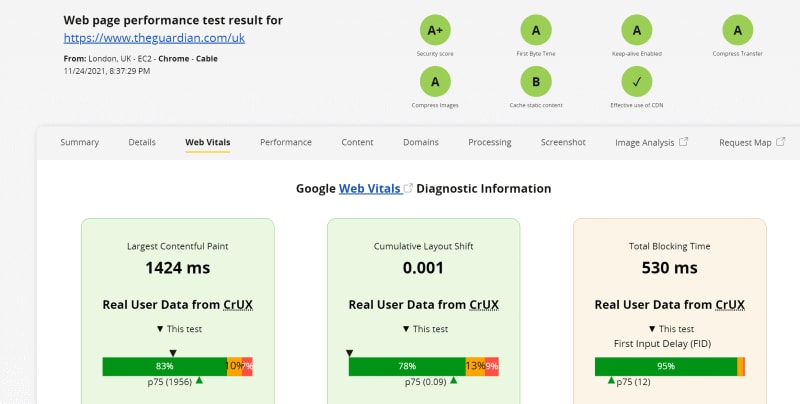

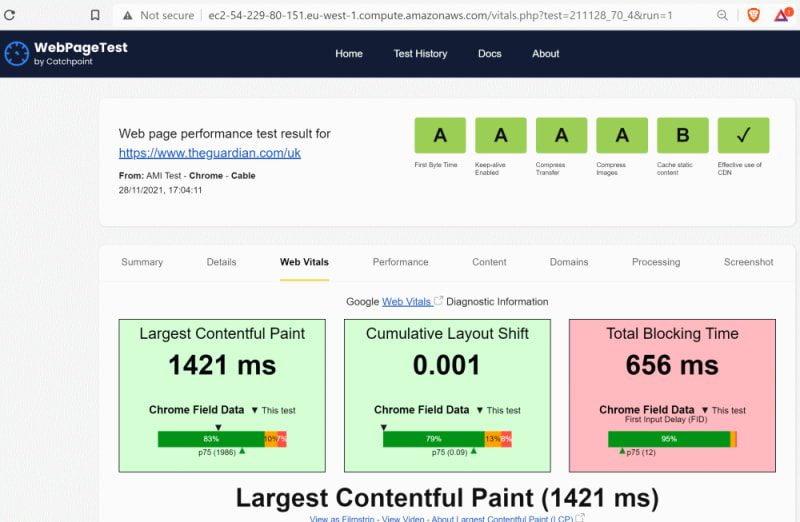

Head over to WebPageTest.org and kick off a test for a popular site, maybe a well known media website. CrUX data only exists for pages that get a reasonable amount of traffic (so there’s no point using my website😁).

Check that out! It’s front and centre, right in your face. You can see how real users have experienced the same page that you tested; the distribution of traffic that gets a Good, Needs Improvement, or Poor experience in each metric; and where the current test would sit on that same scale.
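To make those Good, Needs Improvement, and Poor buckets a bit more concrete, here is a rough Python sketch of the same idea. It uses Google’s published Core Web Vitals thresholds and assumes a CrUX-style histogram of three bins in Good, Needs Improvement, Poor order; the names `THRESHOLDS`, `rate`, and `distribution` are my own, and this is not how WebPageTest itself implements the display.

```python
# Published Core Web Vitals thresholds:
# (upper bound for "Good", upper bound for "Needs Improvement").
THRESHOLDS = {
    "largest_contentful_paint": (2500, 4000),  # milliseconds
    "first_input_delay": (100, 300),           # milliseconds
    "cumulative_layout_shift": (0.1, 0.25),    # unitless score
}


def rate(metric, value):
    """Place a single measurement (e.g. from one test run) on the scale."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "Good"
    if value <= needs_improvement:
        return "Needs Improvement"
    return "Poor"


def distribution(histogram):
    """Turn a three-bin histogram of densities into percentages of traffic."""
    labels = ("Good", "Needs Improvement", "Poor")
    return {
        label: round(bin_.get("density", 0) * 100, 1)
        for label, bin_ in zip(labels, histogram)
    }
```

For example, `rate("largest_contentful_paint", 3000)` lands in Needs Improvement, while `distribution(...)` on a metric’s histogram tells you what share of real users sit in each bucket.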

Very clever, right? Would you like the same thing on your own WebPageTest Private Instance? Sure you would. I mean who wouldn’t?

CrUX on Private WebPageTest

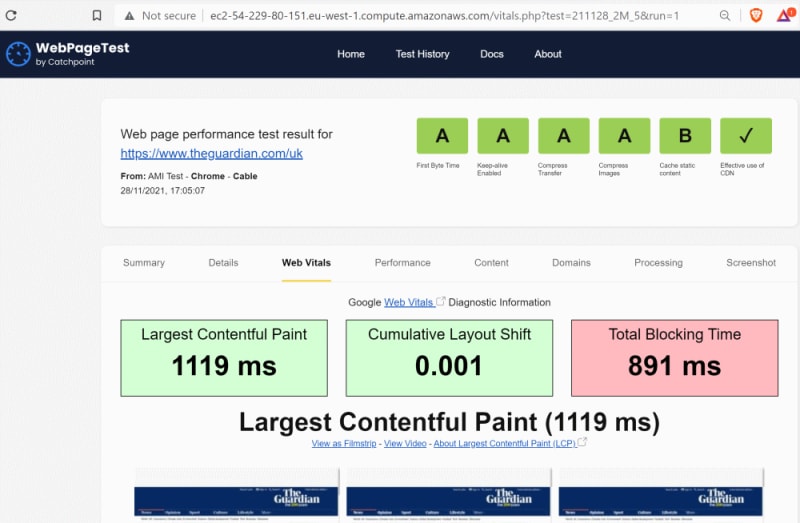

By default, the private WebPageTest doesn’t have such CrUX goodness (also the UI isn’t as up to date as the public version – booo!)

However, we can fix this!

Ingredients

- Private WebPageTest setup

- CrUX API key

- SYNERGISE FOR WINS

Recipe

1) Private WebPageTest Setup

You have a private WebPageTest instance configured already, right? If not, have I got a great article for you! Follow that through then come back here.

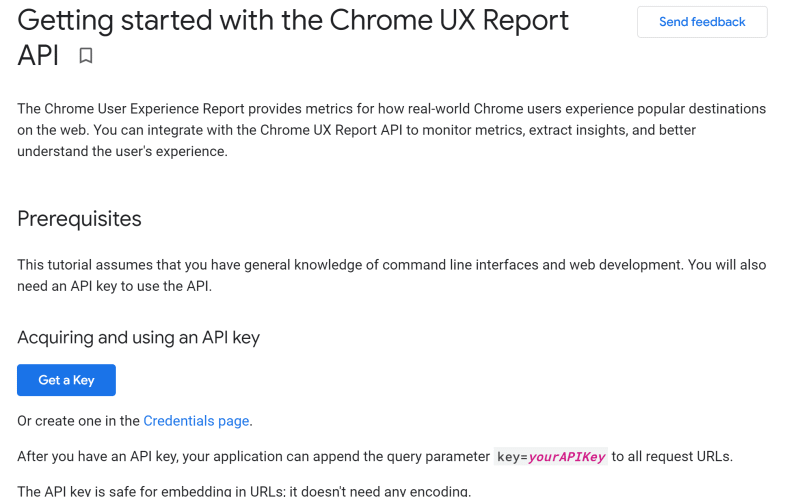

2) CrUX API Key

Head over to the CrUX API guide and hit that big "Get a key" button.

Follow the instructions and copy the resulting API key someplace safe.

3) SYNERGISATION

Log in to your private WPT instance, head over to settings/common/settings.ini and add in a new line at the end:

...

crux_api_key=<your CrUX API key goes here>

...

Save that file (maybe kick off a sudo service nginx restart just to be sure), then kick off a new test and check out that new CrUX goodness!
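If you’d rather script that edit than open a text editor (handy if you rebuild your instance often), something like this Python sketch would do it. It assumes the key belongs on a plain `crux_api_key=` line at the end of the file, as described above; the helper name `set_crux_api_key` is hypothetical, and the path you pass in depends on where WebPageTest lives on your server.

```python
from pathlib import Path


def set_crux_api_key(settings_path, api_key):
    """Add or update the crux_api_key line in a WebPageTest settings.ini."""
    path = Path(settings_path)
    lines = path.read_text(encoding="utf-8").splitlines()
    new_line = f"crux_api_key={api_key}"
    for i, line in enumerate(lines):
        if line.strip().startswith("crux_api_key="):
            lines[i] = new_line  # replace an existing key in place
            break
    else:
        lines.append(new_line)  # otherwise add it as a new line at the end
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")
```

Running it twice is safe: the second call updates the existing line rather than adding a duplicate. It edits the file as text instead of using `configparser`, so any comments already in the file are left alone.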

Pretty cool, huh? Now your WebPageTest results for both you and your competitors (and anyone else you like) will have a sampling of real user data, in the form of CrUX Core Web Vitals (assuming that domain exists in the CrUX dataset, which – although large – does not contain every site).

Nice.