While working at Burberry I had a few opportunities to work directly with some of the lovely people at Google, focussing on site speed, resilience, and improving the user experience.

Working with Google representatives, we organised a series of fascinating hackathon events on improving perceived performance and on not losing users who go offline.

Perception of speed

Each session started with an introduction to that hackathon’s theme. For example, a “Perceived Performance” session described the 2009 study at an airport in Texas where complaints were abnormally high even though the baggage delivery time was below the average wait time. The cause was eventually found to be that the arrival terminal was only 1 minute from the baggage claim area: although passengers were quick to disembark and get through the terminal, they then had to stand around waiting for their luggage.

The solution that most improved passenger happiness was to increase the distance between the arrival terminal and baggage reclaim. The delay between arriving and receiving baggage was largely the same, but it was now spent actively moving, so passengers no longer felt they were wasting their time passively waiting around.

This is a great example of a useful principle: if you can’t make something actually fast, make it seem fast enough.

Offline (or is it?)

Some other interesting hacks related to the user being offline, something that is more common than teams might expect. Mobile traffic has soared over the past few years, leaving desktop traffic in its wake.

One huge difference between desktop and mobile is connectivity. WiFi or ethernet is more reliable than a mobile network, where the user is physically on the move: jumping between cell towers, and passing through built-up areas and underground transport dead zones.

The default website reaction to this would be a browser error page; however, given how often a mobile user might see this on your site, it’s certainly worth considering mitigations.

The first hack worth mentioning came from a hackathon that I suggested a couple of new engineers attend; surprised at the invite, they asked what they should do!

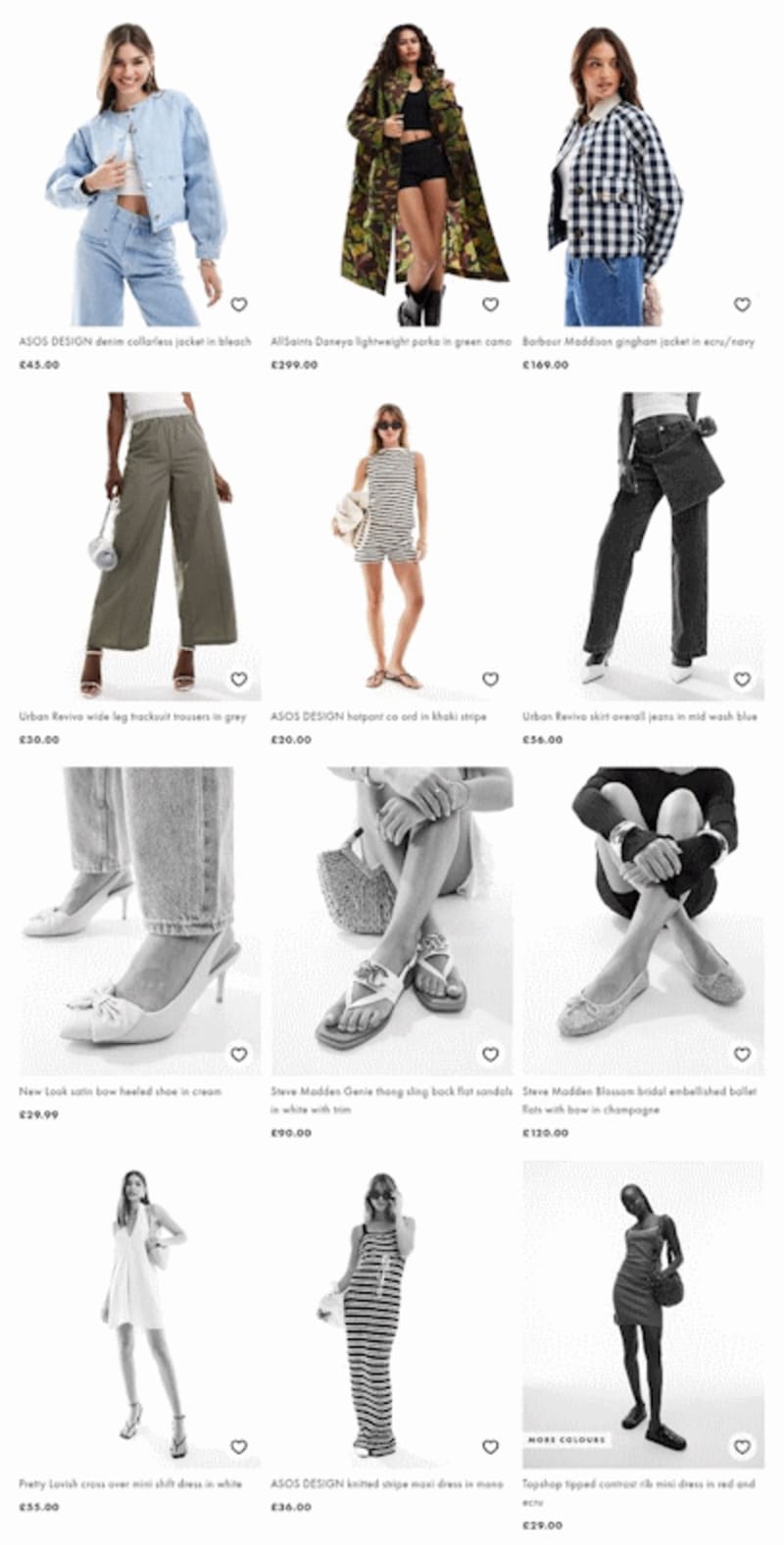

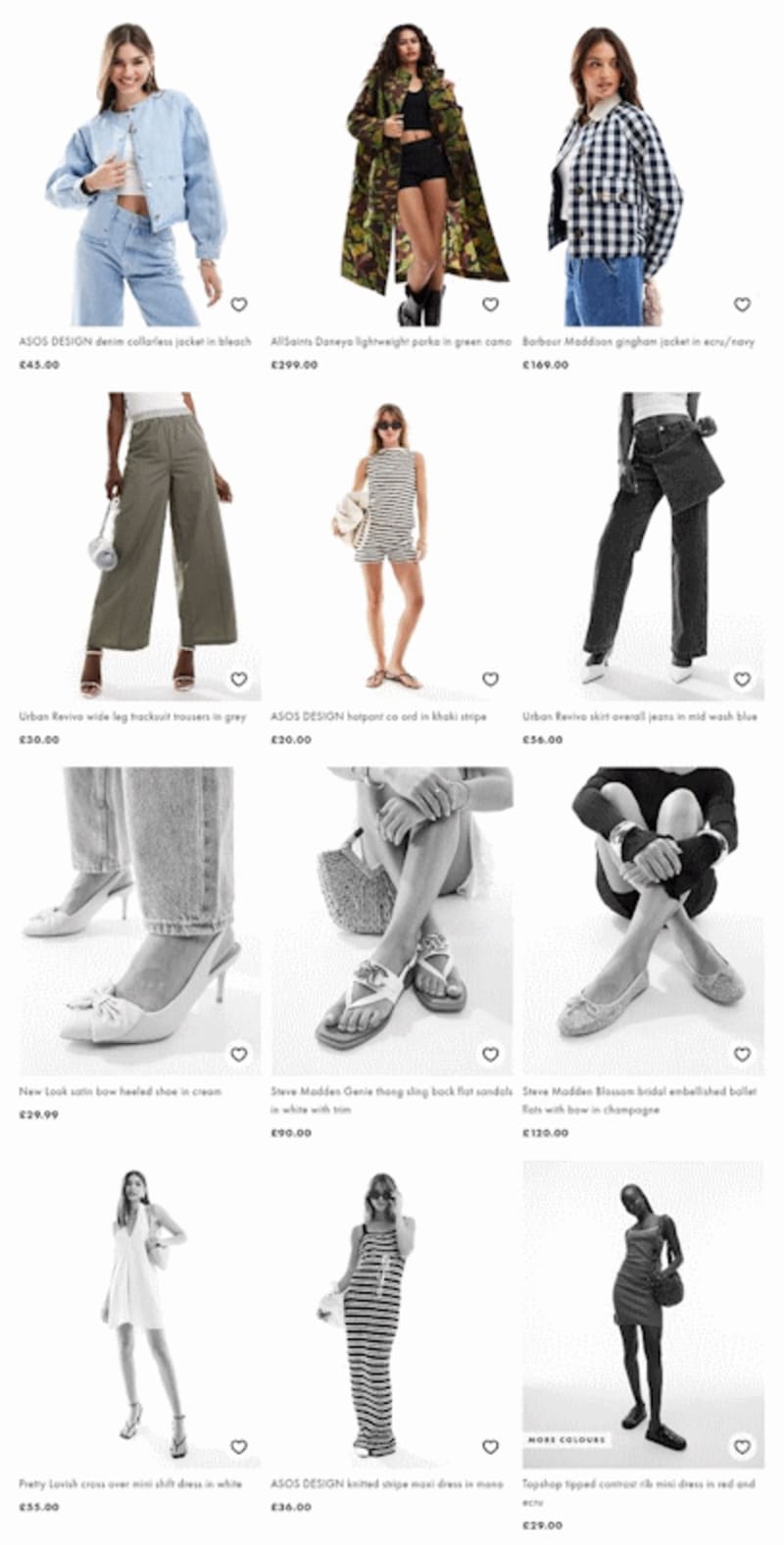

My suggestion was to implement client-side caching via a service worker for any visited product detail page (PDP), and to alter the product listing page (PLP) so that, when the user is offline, images of products that are not in the cache are shown in greyscale. This allows the user to still hop between the listing page and the detail pages for products they’ve already seen – which they can clearly identify – until they’re back online.

(Mock up of how a “greyscale if not cached” product listing page could look)
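To make the idea more concrete, here is a minimal sketch of how it could be wired up. It is not the team’s actual implementation: the cache name `visited-pdps-v1`, the `/product/` URL scheme, the `data-product-link` attribute and the `is-uncached` class are all assumptions made for illustration. The two halves are shown together for brevity; in practice the first would live in the service worker script and the second in the listing page.

```javascript
const PDP_CACHE = 'visited-pdps-v1'; // hypothetical cache name

// Hypothetical URL scheme: detail pages live under /product/.
function isProductDetailPage(url) {
  return new URL(url).pathname.startsWith('/product/');
}

// Pure helper: which listed products should be greyed out right now?
function productsToGreyOut(listedUrls, cachedUrls, online) {
  if (online) return []; // everything is reachable, nothing to grey out
  const cached = new Set(cachedUrls);
  return listedUrls.filter((url) => !cached.has(url));
}

// --- Service worker side ---
// Try the network first and remember every successful PDP response.
async function fetchAndRemember(request) {
  try {
    const response = await fetch(request);
    if (response.ok) {
      const cache = await caches.open(PDP_CACHE);
      await cache.put(request, response.clone());
    }
    return response;
  } catch {
    // Network failed (probably offline): serve the visited copy if there is one.
    return (await caches.match(request)) || Response.error();
  }
}

if (typeof ServiceWorkerGlobalScope !== 'undefined' && self instanceof ServiceWorkerGlobalScope) {
  self.addEventListener('fetch', (event) => {
    const { request } = event;
    if (request.method === 'GET' && isProductDetailPage(request.url)) {
      event.respondWith(fetchAndRemember(request));
    }
  });
}

// --- Listing page side ---
// Toggle a class on each product link; CSS such as
// `.is-uncached img { filter: grayscale(1); }` does the visual work.
async function greyOutUncachedProducts() {
  const cache = await caches.open(PDP_CACHE);
  const cachedUrls = (await cache.keys()).map((request) => request.url);
  const links = [...document.querySelectorAll('a[data-product-link]')]; // hypothetical markup
  const toGrey = new Set(
    productsToGreyOut(links.map((a) => a.href), cachedUrls, navigator.onLine)
  );
  for (const link of links) {
    link.classList.toggle('is-uncached', toGrey.has(link.href));
  }
}

if (typeof window !== 'undefined' && 'caches' in window) {
  window.addEventListener('online', greyOutUncachedProducts);
  window.addEventListener('offline', greyOutUncachedProducts);
  greyOutUncachedProducts();
}
```

Note that this only remembers the detail page responses themselves. A real version would probably also need to cache the images, styles and scripts those pages depend on, and to decide when old entries should expire.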

This suggestion was implemented beautifully, and that team won the multi-company hackathon! Go team!

The second notable hack, although developed at a much later hackathon, would turn out to be complementary to the first.

By utilising a service worker and custom JavaScript (background sync doesn’t work in Safari, which was the biggest mobile browser market for ecommerce), the user could still add products to their basket while offline, with the basket synchronising automatically once the connection is restored. This was slick, and using it by default could avoid annoying “wait, did that add to my bag? Should I click again?” situations. (I also did a talk about Optimistic UI at some point, which relates to this.)
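As a rough illustration of the “queue it now, sync it later” approach, here is a simplified sketch. It is an assumption-laden stand-in rather than the hack itself: it runs in the page instead of a service worker, and the storage key `pending-basket-adds`, the `/api/basket` endpoint and the item shape are all hypothetical.

```javascript
const QUEUE_KEY = 'pending-basket-adds'; // hypothetical storage key

// `storage` is anything with getItem/setItem, e.g. window.localStorage.
function createBasketQueue(storage) {
  const read = () => JSON.parse(storage.getItem(QUEUE_KEY) || '[]');
  const write = (items) => storage.setItem(QUEUE_KEY, JSON.stringify(items));
  let flushing = false;

  return {
    pending: read,
    enqueue(item) {
      write([...read(), item]);
    },
    // Send queued items in order; stop at the first failure and keep the rest.
    // Resolves with the number of items still waiting.
    async flush(send) {
      if (flushing) return read().length; // avoid two flushes sending the same item
      flushing = true;
      try {
        for (let items = read(); items.length > 0; items = read()) {
          try {
            await send(items[0]);
          } catch {
            break; // still offline or server error: retry on the next flush
          }
          write(read().slice(1)); // re-read so items added meanwhile are kept
        }
      } finally {
        flushing = false;
      }
      return read().length;
    },
  };
}

// Record the intent first (so the UI can update optimistically), then try to sync.
async function addToBasket(item, queue, send, isOnline) {
  queue.enqueue(item);
  return isOnline() ? queue.flush(send) : queue.pending().length;
}

// Browser wiring: retry whenever the connection comes back, and on page load.
if (typeof window !== 'undefined') {
  const queue = createBasketQueue(window.localStorage);
  const send = async (item) => {
    const response = await fetch('/api/basket', { // hypothetical endpoint
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify(item),
    });
    if (!response.ok) throw new Error(`Basket sync failed: ${response.status}`);
  };
  window.addEventListener('online', () => queue.flush(send));
  queue.flush(send);
}
```

Because the item is written to the queue before any network call, the page can show it in the bag immediately and reconcile later, which is what removes the “should I click again?” moment.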

This could potentially be progressively enhanced further, and simplified, by using the Background Sync API or the newer Periodic Background Sync API (both exposed through the service worker registration) in browsers that support them, keeping the custom JavaScript as the fallback elsewhere. I’ll leave it as an exercise for the reader! Fancy trying it?
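If you do fancy trying it, one possible starting point is below. It is only a sketch: it feature-detects one-off background sync on the service worker registration (`registration.sync`), and falls back to whatever custom retry logic already exists. The tag name `basket-sync` and the `fallback` and `flush` callbacks are hypothetical placeholders for your own code.

```javascript
const SYNC_TAG = 'basket-sync'; // hypothetical tag name

// Page side: ask the browser to fire a `sync` event in the service worker
// once connectivity returns; otherwise use the custom fallback.
async function scheduleBasketSync(registration, fallback) {
  if (registration && 'sync' in registration) {
    try {
      await registration.sync.register(SYNC_TAG);
      return 'background-sync';
    } catch {
      // Registration can be rejected (for example, permission denied),
      // so fall through to the custom approach.
    }
  }
  fallback();
  return 'fallback';
}

// Service worker side: call this from a `sync` event listener.
// `flush` is your own function that sends the queued basket items and
// returns a promise; rejecting it lets the browser retry later.
function handleSyncEvent(event, flush) {
  if (event.tag !== SYNC_TAG) return false;
  event.waitUntil(flush());
  return true;
}
```

On the page this could be called as `navigator.serviceWorker.ready.then((registration) => scheduleBasketSync(registration, retryOnOnlineEvent))`, and in the service worker as `self.addEventListener('sync', (event) => handleSyncEvent(event, flushQueuedItems))`, where `retryOnOnlineEvent` and `flushQueuedItems` are names made up for this example. Bear in mind that `localStorage` is not available inside a service worker, so the queue would need to live somewhere like IndexedDB.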

Being able to involve a large subset of the digital department – including all roles, not just engineers – was fascinating. Getting everyone onto a shared context, and seeing what is actually possible in browsers with a relatively small amount of code and effort, was an eye-opener for many.

If you are unsure of the value of a hackathon, or not sure how to run one, contact someone who has done it before to help define the desired outcomes, set the agenda, and help it go smoothly. The lovely people at Google did this beautifully for us at Burberry, and I’m happy to advise also!