At Mailcloud I am constantly destroying and rebuilding environments (intentionally!), especially the performance test ones. I also need to gather oodles of metrics from these tests, and I have a simple script to create the VM and another to install all of the tools I need.

This article covers using a simple PowerShell script to create the Linux VM, and then a slightly less simple bash script to install all the goodies. It’s not as complicated as it may look, and considering you can get something running in a matter of minutes from a couple of small scripts, I think it’s pretty cool!

Stage 1, Creating the VM using PowerShell

Set up the variables

# os configuration

# Get-AzureVMImage | Select ImageName

# I wanted an Ubuntu 14.10 VM:

$ImageName = "b39f27a8b8c64d52b05eac6a62ebad85__Ubuntu-14_10-amd64-server-20140625-alpha1-en-us-30GB"

# account configuration

$ServiceName = "your-service-here"

$SubscriptionName= "your azure subscription name here"

$StorageAccount = "your storage account name here"

$Location = "your location here"

# vm configuration - setting up ssh keys is better, username/pwd is easier.

$user = "username"

$pwd = "p@ssword"

# ports

## ssh

$SSHPort = 53401 #set something specific for ssh else powershell generates a random one

## statsd

$StatsDInputPort = 1234

$StatsDAdminPort = 5678

## elasticsearch

$ElasticSearchPort = 12345

Get your Azure subscription info

Set-AzureSubscription -SubscriptionName $SubscriptionName `

-CurrentStorageAccountName $StorageAccount

Select-AzureSubscription -SubscriptionName $SubscriptionName

Create the VM

Change “Small” to one of the other valid instance sizes if you need to.

New-AzureVMConfig -Name $ServiceName -InstanceSize Small -ImageName $ImageName `

| Add-AzureProvisioningConfig -Linux -LinuxUser $user -Password $pwd -NoSSHEndpoint `

| New-AzureVM -ServiceName $ServiceName -Location $Location

Open the required ports and map them

Get-AzureVM -ServiceName $ServiceName -Name $ServiceName `

| Add-AzureEndpoint -Name "SSH" -LocalPort 22 -PublicPort $SSHPort -Protocol tcp `

| Add-AzureEndpoint -Name "StatsDInput" -LocalPort 8125 -PublicPort $StatsDInputPort -Protocol udp `

| Add-AzureEndpoint -Name "StatsDAdmin" -LocalPort 8126 -PublicPort $StatsDAdminPort -Protocol tcp `

| Add-AzureEndpoint -Name "ElasticSearch" -LocalPort 9200 -PublicPort $ElasticSearchPort -Protocol tcp `

| Update-AzureVM

Write-Host "now run: ssh $serviceName.cloudapp.net -p $SSHPort -l $user"

The whole script is in a gist here

Stage 1 complete

Now we have a shiny new VM running up in Azure, so let’s configure it for gathering metrics using a bash script.

Stage 2, Installing the metrics software

You could probably have the PowerShell script automatically upload and execute this, but it’s no big deal to SSH in, create a new file with nano or vi, paste in the script below, chmod it, and execute it.
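If you would rather not paste the script in by hand, the upload-and-run step can be sketched from any machine with scp and ssh. This is only a rough sketch: the host name, user, and the script name `install-metrics.sh` are placeholders of mine rather than anything from the gist, and the helpers just print the commands so you can check them before running anything.

```shell
#!/usr/bin/env bash
# Sketch: build the commands to copy a setup script to the VM and run it.
# HOST, PORT, USER_NAME and SCRIPT are placeholder values (assumptions).

# Print the scp command that would upload the script to the user's home dir.
build_upload_cmd() {
  # $1 = host, $2 = public ssh port, $3 = user, $4 = local script path
  printf 'scp -P %s %s %s@%s:~/\n' "$2" "$4" "$3" "$1"
}

# Print the ssh command that would run the uploaded script.
# -t allocates a terminal so that sudo inside the script can prompt.
build_run_cmd() {
  # $1 = host, $2 = public ssh port, $3 = user, $4 = local script path
  printf 'ssh -t -p %s -l %s %s "bash ~/%s"\n' "$2" "$3" "$1" "$(basename "$4")"
}

HOST="your-service-here.cloudapp.net"
PORT=53401
USER_NAME="username"
SCRIPT="./install-metrics.sh"

build_upload_cmd "$HOST" "$PORT" "$USER_NAME" "$SCRIPT"
build_run_cmd "$HOST" "$PORT" "$USER_NAME" "$SCRIPT"
```

Note that scp takes the port as `-P` (capital) while ssh uses `-p`. Once the printed commands look right, paste them into a terminal.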

Set up the prerequisites

# Prerequisites

echo "#### Starting"

echo "#### apt-get updating and installing prereqs"

sudo apt-get update

sudo apt-get install screen libexpat1-dev libicu-dev git build-essential curl -y

Install nodejs

# Node

echo "#### Installing node"

. ~/.bashrc

export "PATH=$HOME/local/bin:$PATH"

mkdir $HOME/local

mkdir $HOME/node-latest-install

pushd $HOME/node-latest-install

curl http://nodejs.org/dist/node-latest.tar.gz | tar xz --strip-components=1

./configure --prefix=$HOME/local

make install

popd

## the path isn't always correct, so set up a symlink

sudo ln -s /usr/bin/nodejs /usr/bin/node

## nodemon

echo "#### npming nodemon"

sudo apt-get install npm -y

sudo npm install -g nodemon

Add StatsD

Here I’ve configured it to use mongo-statsd-backend as the only backend and not graphite. Configuring Graphite is a PAIN as you have to set up python and a web server and deal with all the permissions, etc. Gah.

# StatsD

echo "#### installing statsd"

pushd /opt

sudo git clone https://github.com/etsy/statsd.git

cat > /tmp/localConfig.js << EOF

{

port: 8125

, dumpMessages: true

, debug: true

, mongoHost: 'localhost'

, mongoPort: 27017

, mongoMax: 2160

, mongoPrefix: true

, mongoName: 'statsD'

, backends: ['/opt/statsd/mongo-statsd-backend/lib/index.js']

}

EOF

sudo cp /tmp/localConfig.js /opt/statsd/localConfig.js

popd

Mongo and a patched mongo-statsd-backend

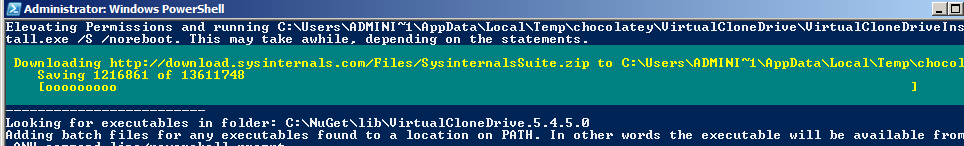

You could use npm to install mongo-statsd-backend, but that version has a couple of issues that stop it working out of the box, and the fixes are still sitting in pending pull requests. As such, I use my own patched version and install from source.

# MongoDB

echo "#### installing mongodb"

sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv 7F0CEB10

echo 'deb http://downloads-distro.mongodb.org/repo/ubuntu-upstart dist 10gen' | sudo tee /etc/apt/sources.list.d/mongodb.list

sudo apt-get update && sudo apt-get install mongodb-org -y

sudo service mongod start

cd /opt/statsd

## Mongo Statsd backend - mongo-statsd-backend

## the version on npm has issues; use a patched version on github instead:

sudo git clone https://github.com/rposbo/mongo-statsd-backend.git

cd mongo-statsd-backend

sudo npm install
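One thing to watch for is StatsD starting before mongod is accepting connections. A small retry helper is one way to guard against that. This is a sketch of my own, not part of the gist: `wait_until` is a hypothetical helper name, and the commented-out usage line assumes the `mongo` shell that ships with the mongodb-org package is on the path.

```shell
#!/usr/bin/env bash
# wait_until RETRIES DELAY COMMAND...
# Runs COMMAND until it succeeds, up to RETRIES attempts, sleeping DELAY
# seconds after each failure. Returns 0 on success, 1 if every attempt failed.
wait_until() {
  retries=$1
  delay=$2
  shift 2
  attempt=1
  while [ "$attempt" -le "$retries" ]; do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
  return 1
}

# Example usage (commented out so the sketch has no side effects);
# assumes the mongo shell is installed:
# wait_until 30 1 mongo --eval 'db.runCommand({ ping: 1 })' && echo "#### mongod is up"
```

The command to retry is passed as arguments, so the same helper could wrap a curl against Elasticsearch later in the script.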

Ready to start?

Let’s kick off a screen

# Start StatsD

screen nodemon /opt/statsd/stats.js /opt/statsd/localConfig.js
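With StatsD running, a quick smoke test is to fire a metric at it by hand. StatsD's wire format is just `name:value|type` over UDP, so a sketch like the one below is enough. The metric names are made up for illustration, and the actual send is commented out because it assumes a netcat that supports `-u` and `-w` is installed.

```shell
#!/usr/bin/env bash
# Format one StatsD line: <name>:<value>|<type>
# Common types: c = counter, ms = timer, g = gauge.
statsd_line() {
  printf '%s:%s|%s\n' "$1" "$2" "$3"
}

# Send one metric over UDP with netcat.
# $1 = host, $2 = port, then name, value, type.
send_metric() {
  host=$1
  port=$2
  shift 2
  statsd_line "$@" | nc -u -w1 "$host" "$port"
}

# Print a couple of example lines (hypothetical metric names).
statsd_line "perftest.login" 320 ms
statsd_line "perftest.requests" 1 c

# Uncomment to send to the local StatsD started above:
# send_metric localhost 8125 perftest.login 320 ms
```

Because `dumpMessages` and `debug` are both on in the config above, a metric that arrives should show up in the StatsD output inside the screen session.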

Fancy getting ElasticSearch in there too?

To pull down and install the java runtime, install ES and the es-head, kopf, and bigdesk plugins, add the below script just before you kick off the “screen” command.

# ElasticSearch

echo "#### installing elasticsearch"

sudo apt-get update && sudo apt-get install default-jre default-jdk -y

wget https://download.elasticsearch.org/elasticsearch/elasticsearch/elasticsearch-1.1.1.deb && sudo dpkg -i elasticsearch-1.1.1.deb

sudo update-rc.d elasticsearch defaults 95 10

sudo /etc/init.d/elasticsearch start

## Elasticsearch plugins

sudo /usr/share/elasticsearch/bin/plugin -install mobz/elasticsearch-head

sudo /usr/share/elasticsearch/bin/plugin -install lukas-vlcek/bigdesk

You can now browse to /_plugin/bigdesk (or the others) on the public port you set as $ElasticSearchPort in the PowerShell script to see your various ES web interfaces.
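To save working out the addresses by hand, a tiny helper can print them. This is only a sketch: the host name is a placeholder, 12345 is the example public port from the variables in Stage 1, and the curl call is commented out since it needs the VM to be reachable.

```shell
#!/usr/bin/env bash
# Build a URL for the Elasticsearch endpoint exposed by the VM.
# $1 = host, $2 = public port, $3 = path (starting with /)
es_url() {
  printf 'http://%s:%s%s\n' "$1" "$2" "$3"
}

HOST="your-service-here.cloudapp.net"   # placeholder host name
ES_PORT=12345                           # example public port from Stage 1

es_url "$HOST" "$ES_PORT" "/_cluster/health?pretty"
es_url "$HOST" "$ES_PORT" "/_plugin/bigdesk/"
es_url "$HOST" "$ES_PORT" "/_plugin/head/"

# Uncomment to check that the node is answering:
# curl -s "$(es_url "$HOST" "$ES_PORT" "/_cluster/health?pretty")"
```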

The whole script is in a gist here.

Stage 2 complete

I use StatsD to calculate a few bits of info around the processing of common tasks, in order to find those with max figures that are several standard deviations away from the average and highlight them as possible areas of concern.
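The outlier check itself is simple enough to sketch with awk. This is an illustration of the idea rather than my actual reporting code: it assumes the timings have been exported as plain "task duration" lines, and `flag_outliers` is a hypothetical name. It uses the population standard deviation, so with very few samples per task the max can never be many deviations from the mean.

```shell
#!/usr/bin/env bash
# flag_outliers K
# Reads "task value" lines on stdin and prints each task whose max value
# is more than K standard deviations above that task's mean.
flag_outliers() {
  awk -v k="$1" '
    {
      n[$1]++                      # sample count per task
      sum[$1] += $2                # running sum
      sumsq[$1] += $2 * $2         # running sum of squares
      if (!($1 in max) || $2 > max[$1]) max[$1] = $2
    }
    END {
      for (t in n) {
        mean = sum[t] / n[t]
        var = sumsq[t] / n[t] - mean * mean   # population variance
        sd = (var > 0) ? sqrt(var) : 0
        if (sd > 0 && max[t] > mean + k * sd)
          printf "%s max=%d mean=%.1f sd=%.1f\n", t, max[t], mean, sd
      }
    }'
}

# Made-up sample data: nine quick logins and one very slow one,
# plus a task with steady timings that should not be flagged.
{
  for i in 1 2 3 4 5 6 7 8 9; do echo "login 100"; done
  echo "login 1000"
  echo "save 50"; echo "save 52"; echo "save 48"
} | flag_outliers 2
```

Only the login task is reported here, since its slowest run sits well outside two standard deviations while the save timings barely move.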

I have an Azure Worker Role to pull Azure diagnostics from table and blob storage and spew it into the Elasticsearch instance for easier searching. I’m still figuring out how to get it looking pretty in a Grafana instance, though; I’ll get there eventually.